In context: Nobody in the industry is particularly happy about relying entirely on Nvidia’s chips for AI training, though many companies feel they don’t have a real choice. Apple, however, took a different path and appears to be pleased with the results: it opted for Google’s TPUs to train its large language models. We know this because Cupertino released a research paper on the processes it used to develop Apple Intelligence features. That, too, represents a departure for Apple, which tends to play things close to the chest.

Apple has revealed a surprising amount of detail about how it developed its Apple Intelligence features in a newly released research paper. The headline news is that Apple opted for Google TPUs, specifically TPU v4 and TPU v5 chips, instead of Nvidia GPUs to train its models. That is a departure from industry norms, as many other LLMs, such as those from OpenAI and Meta, are typically trained on Nvidia GPUs.

For the uninitiated, Google sells access to TPUs through its Google Cloud Platform. Customers must build software on this platform in order to use the chips.

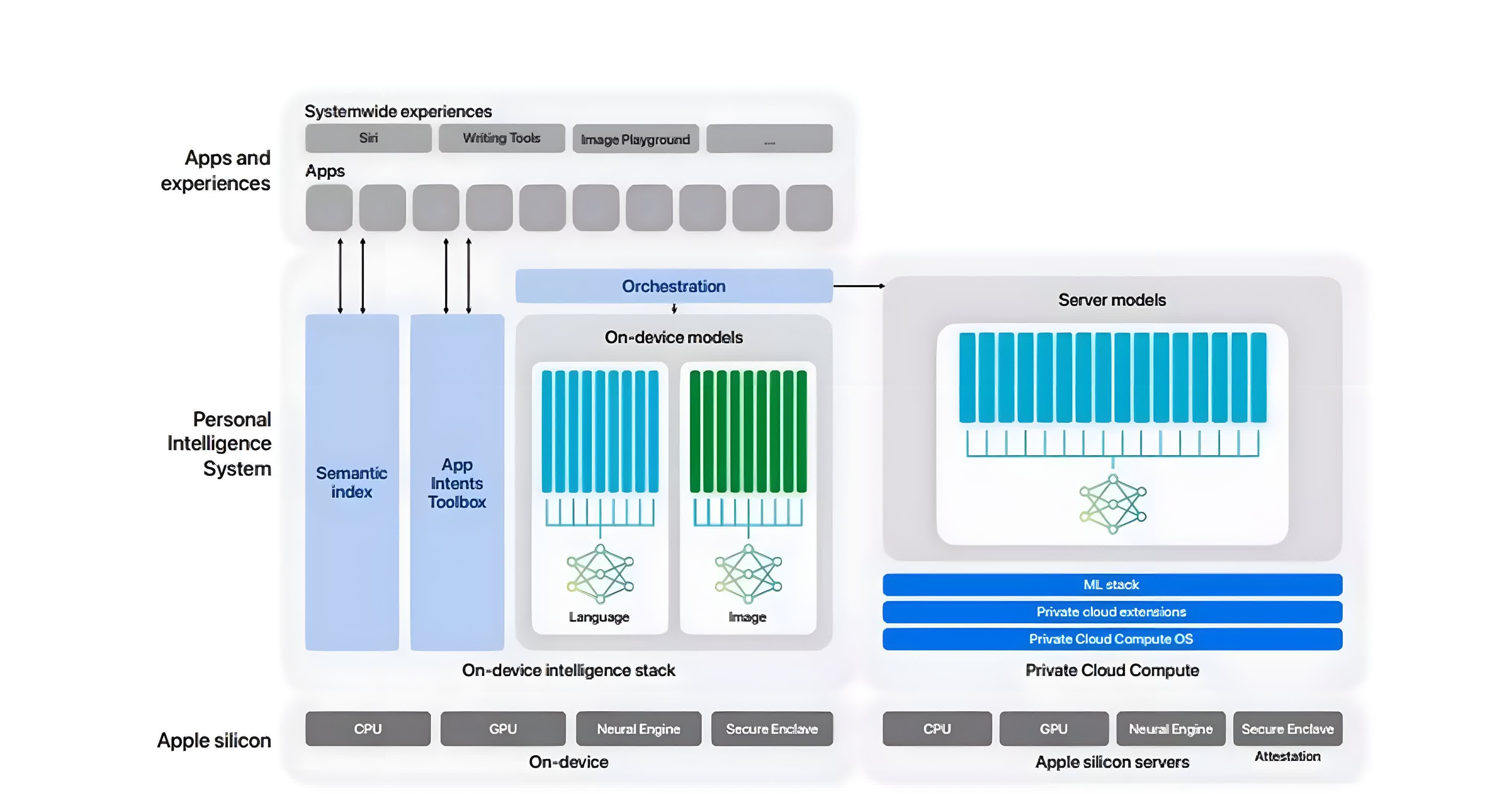

Here’s a breakdown of what Apple did.

AFM-server, Apple’s largest language model, was trained on 8,192 TPU v4 chips configured in 8 slices of 1,024 chips each, interconnected via a data-center network. Training AFM-server involved three stages: it started with 6.3 trillion tokens, continued with 1 trillion tokens, and concluded with context lengthening using 100 billion tokens.
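The figures in that paragraph are easy to sanity-check. The short Python sketch below uses only the numbers reported here (the stage labels are descriptive placeholders, not Apple’s names or code) to multiply out the chip count and total the tokens across the three stages. They add up to 7.4 trillion tokens, with the first stage accounting for roughly 85% of them.

```python
from dataclasses import dataclass

# Figures as reported in the article; stage labels are descriptive placeholders.
SLICES = 8
CHIPS_PER_SLICE = 1_024


@dataclass(frozen=True)
class Stage:
    name: str
    tokens: int  # training tokens consumed in this stage


STAGES = [
    Stage("stage 1", 6_300_000_000_000),            # 6.3 trillion tokens
    Stage("stage 2", 1_000_000_000_000),            # 1 trillion tokens
    Stage("context lengthening", 100_000_000_000),  # 100 billion tokens
]


def total_chips(slices: int, chips_per_slice: int) -> int:
    """Total chips when the cluster is split into equal-sized slices."""
    return slices * chips_per_slice


def total_tokens(stages: list[Stage]) -> int:
    """Sum of training tokens across all stages."""
    return sum(stage.tokens for stage in stages)


if __name__ == "__main__":
    overall = total_tokens(STAGES)
    print(f"TPU v4 chips: {total_chips(SLICES, CHIPS_PER_SLICE):,}")
    for stage in STAGES:
        print(f"{stage.name}: {stage.tokens / 1e12:.1f}T tokens "
              f"({stage.tokens / overall:.1%} of total)")
    print(f"total: {overall / 1e12:.1f}T tokens")
```

Running it prints the chip total (8,192) and each stage’s share of the overall token budget.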

The AFM-on-device model is a 3 billion parameter model that was pruned and distilled from a larger 6.4 billion parameter model. This on-device model was trained on a cluster of 2,048 TPU v5p chips.
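Distillation is a standard technique in which a smaller “student” model is trained to match the output distribution of a larger “teacher.” This is not Apple’s training code; it is a plain-Python sketch of the generic textbook distillation loss (KL divergence between temperature-softened teacher and student distributions, scaled by T²). The temperature and the example logits are assumptions chosen for illustration. For scale, 3 billion parameters is roughly 47% of 6.4 billion.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max to avoid overflow in exp()
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """Generic knowledge-distillation loss: KL(teacher || student) over
    temperature-softened distributions, multiplied by T^2 so gradient
    magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    # Hypothetical next-token logits over a 3-token vocabulary.
    teacher = [4.0, 1.0, 0.5]
    student = [2.5, 1.5, 0.5]
    print(f"distillation loss: {distillation_loss(teacher, student):.4f}")
    print(f"student/teacher size ratio: {3.0 / 6.4:.2%}")
```

The loss is zero when the student reproduces the teacher’s softened distribution exactly and grows as the two diverge; a higher temperature exposes more of the teacher’s preferences among lower-ranked tokens.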

Apple used data from its Applebot web crawler, which adheres to robots.txt, along with various licensed high-quality datasets. It also used selected code, math, and public datasets.
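robots.txt is a plain-text file in which a site lists which paths crawlers may fetch, optionally per user agent. Apple hasn’t published Applebot’s code, so the following is only a sketch of what honoring that file looks like, using Python’s standard-library `urllib.robotparser`. The rules and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; a real crawler would first download
# https://<site>/robots.txt and parse that instead.
ROBOTS_TXT = """\
User-agent: Applebot
Disallow: /private/

User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """True if the rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for agent, url in [
        ("Applebot", "https://example.com/articles/1"),
        ("Applebot", "https://example.com/private/page"),
        ("SomeOtherBot", "https://example.com/articles/1"),
    ]:
        print(agent, url, "->", allowed(rules, agent, url))
```

Under these made-up rules, Applebot may crawl everything except `/private/`, while any other crawler falls through to the `*` group and is blocked entirely.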

According to Apple’s internal testing, both the AFM-server and AFM-on-device models performed well in benchmarks.

A few things are worth noting. One, the detailed disclosure in the research paper is notable for Apple, a company not typically known for its transparency. It signals a significant effort to showcase its advances in AI.

Two, the choice to go with Google TPUs is telling. Nvidia GPUs have been in extremely high demand and short supply for AI training. Google likely had TPUs more readily available, which Apple could tap quickly to accelerate its AI development, an important consideration for a company that is already a late arrival to the market.


It is also intriguing that Apple found Google’s TPU v4 and TPU v5 chips provided the computational power needed to train its LLMs. The 8,192 TPU v4 chips used to train the AFM-server model appear to offer performance comparable to Nvidia’s H100 GPUs for this workload.

Also, like many other companies, Apple is wary of becoming overly reliant on Nvidia, which currently dominates the AI chip market. For a company that is meticulous about maintaining control over its technology stack, diversifying away from Nvidia by going with Google makes particular sense.