In short: The AI inference market is growing rapidly, with OpenAI projected to earn $3.4 billion in revenue this year from ChatGPT's predictions. This growth presents opportunities for new entrants like Cerebras Systems to capture a share of the market. The company's innovative approach could lead to faster, more efficient AI applications, provided its performance claims are validated through independent testing and real-world deployments.

Cerebras Systems, traditionally focused on selling AI computers for training neural networks, is pivoting to offer inference services. The company is using its wafer-scale engine (WSE), a computer chip the size of a dinner plate, to load Meta's open-source LLaMA 3.1 AI model directly onto the chip, a configuration that the company says significantly reduces costs and power consumption while dramatically increasing processing speeds.

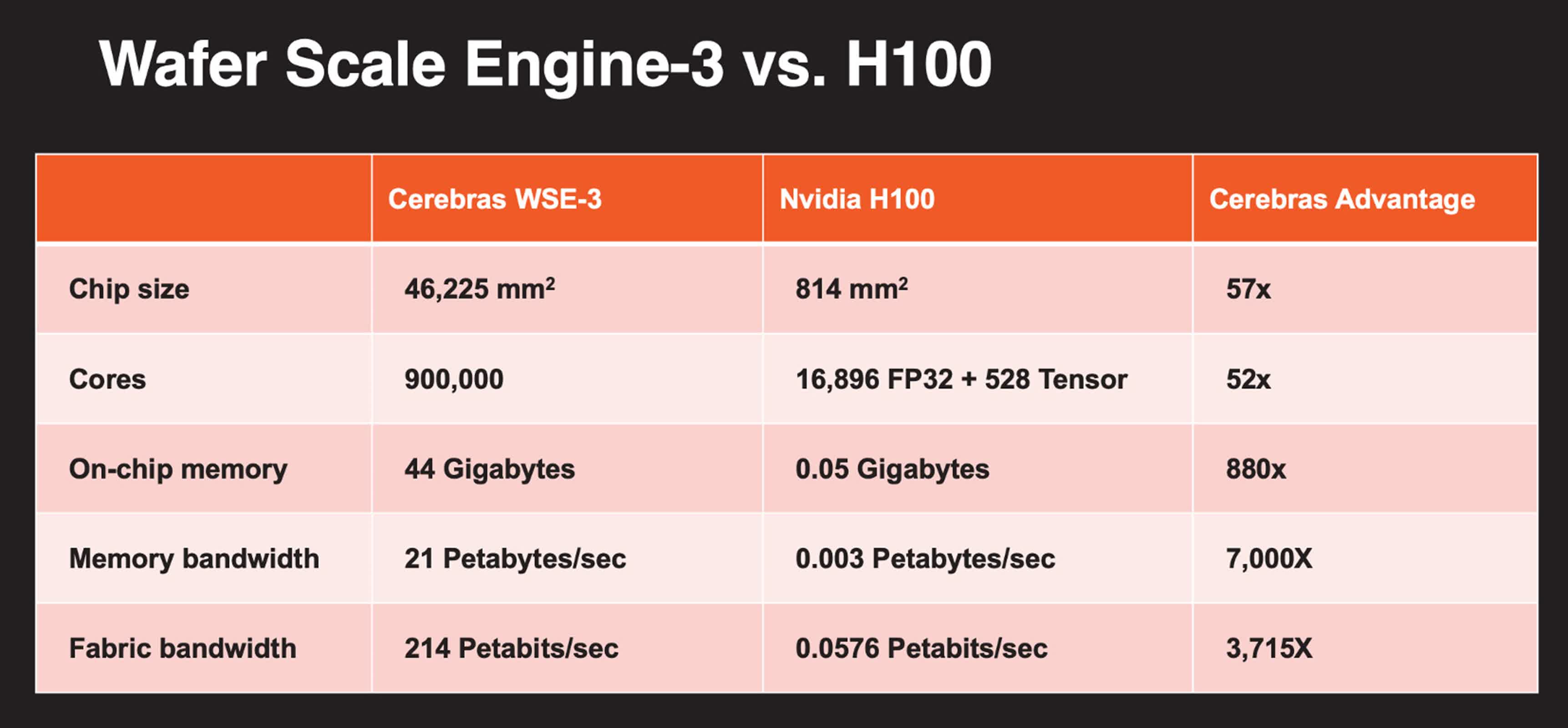

Cerebras' WSE is unique in that it integrates up to 900,000 cores on a single wafer, eliminating the need for external wiring between separate chips. Each core functions as a self-contained unit, combining both computation and memory. This design allows the model weights to be distributed across the cores, enabling faster data access and processing.
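To make the idea of weights "distributed across the cores" more concrete, here is a minimal Python sketch of one generic way to do it: a weight matrix is split row-wise across a set of hypothetical cores, each of which stores its slice locally and computes only its share of a matrix-vector product. The `Core`, `distribute` and `matvec` names are illustrative assumptions, and this is a textbook partitioning scheme, not Cerebras' actual mapping of LLaMA onto the WSE.

```python
# Hypothetical illustration, not Cerebras' actual scheme: a weight matrix is
# split row-wise across "cores", each keeping its slice in local memory and
# computing only its part of the matrix-vector product.
from dataclasses import dataclass


@dataclass
class Core:
    """A hypothetical compute unit that holds its own slice of the weights."""
    weight_rows: list[list[float]]  # rows of W stored locally on this core

    def compute(self, x: list[float]) -> list[float]:
        # Each output element is a dot product of a locally stored row with
        # the input, so no weights are fetched from off-core memory.
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weight_rows]


def distribute(weights: list[list[float]], num_cores: int) -> list[Core]:
    """Assign contiguous blocks of rows to cores as evenly as possible."""
    base, extra = divmod(len(weights), num_cores)
    cores, start = [], 0
    for i in range(num_cores):
        size = base + (1 if i < extra else 0)  # first `extra` cores get one more row
        cores.append(Core(weights[start:start + size]))
        start += size
    return cores


def matvec(cores: list[Core], x: list[float]) -> list[float]:
    # Only the small input vector is sent to every core; the partial results
    # are concatenated in core order to form the full output vector.
    out: list[float] = []
    for core in cores:
        out.extend(core.compute(x))
    return out


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    cores = distribute(W, num_cores=2)
    print(matvec(cores, [1.0, 1.0]))
```

The point of the layout is that the large object (the weights) stays put while only the small input and output vectors move, which is the property Cerebras attributes to keeping weights next to each core.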


"We actually load the model weights onto the wafer, so it's right there, next to the core, which facilitates rapid data processing without the need for data to travel over slower interfaces," Andy Hock, Cerebras' Senior Vice President of Product and Strategy, told Forbes.

Cerebras' chip is "10 times faster than anything else on the market" for AI inference tasks, Andrew Feldman, co-founder and CEO of Cerebras, said in a presentation to the press. It can process 1,800 tokens per second with the 8-billion-parameter version of LLaMA 3.1, compared to 260 tokens per second on state-of-the-art GPUs. This level of performance has been validated by Artificial Analysis, Inc.
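To put the quoted throughput figures in more concrete terms, the short Python sketch below converts them into an average time per token and the time needed for a response of a given length. It uses only the two numbers from Feldman's presentation; the 1,000-token response length is an arbitrary assumption, and the calculation assumes a steady generation rate, ignoring time-to-first-token, prompt processing and network overhead.

```python
# Back-of-the-envelope comparison using only the figures quoted for
# LLaMA 3.1 8B: 1,800 tokens/s (Cerebras) vs 260 tokens/s (GPUs).
CEREBRAS_TOKENS_PER_S = 1_800
GPU_TOKENS_PER_S = 260


def ms_per_token(tokens_per_s: float) -> float:
    """Average time to emit one token, in milliseconds (steady rate assumed)."""
    return 1_000 / tokens_per_s


def seconds_for(tokens: int, tokens_per_s: float) -> float:
    """Time to generate `tokens` tokens at a steady rate, in seconds."""
    return tokens / tokens_per_s


speedup = CEREBRAS_TOKENS_PER_S / GPU_TOKENS_PER_S

if __name__ == "__main__":
    print(f"speedup: {speedup:.1f}x")
    print(f"ms/token: {ms_per_token(CEREBRAS_TOKENS_PER_S):.2f} "
          f"vs {ms_per_token(GPU_TOKENS_PER_S):.2f}")
    # A hypothetical 1,000-token answer:
    print(f"1,000 tokens: {seconds_for(1_000, CEREBRAS_TOKENS_PER_S):.2f} s "
          f"vs {seconds_for(1_000, GPU_TOKENS_PER_S):.2f} s")
```

For this particular pair of figures, the ratio works out to roughly 6.9x: about 0.56 ms per token versus about 3.85 ms, or roughly 0.56 s versus 3.85 s for a 1,000-token answer.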

Beyond raw speed, Feldman and chief technologist Sean Lie suggested that faster processing enables more complex and accurate inference tasks, including multiple-query tasks and real-time voice responses. Feldman explained that speed makes room for "chain-of-thought prompting," which leads to more accurate results by encouraging the model to show its work and refine its answers.
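One way to see why speed matters for chain-of-thought prompting is a simple budget calculation: under a fixed response-time target, a faster decoder leaves room for more intermediate reasoning tokens before the final answer. The Python sketch below does that arithmetic with the two throughput figures quoted earlier; the 2-second budget, the 150-token answer length and the `reasoning_tokens_available` helper are hypothetical assumptions for illustration, not numbers or tools from Cerebras.

```python
# Hypothetical illustration: under a fixed response-time budget, a faster
# decoder leaves room for "chain-of-thought" tokens before the final answer.
# The budget and answer length below are assumptions, not Cerebras figures.


def reasoning_tokens_available(tokens_per_s: float, budget_s: float,
                               answer_tokens: int) -> int:
    """Tokens left for step-by-step reasoning after reserving the answer.

    Assumes a steady generation rate and ignores time-to-first-token and
    prompt processing, so real-world numbers would be lower.
    """
    total = int(tokens_per_s * budget_s)  # tokens that fit in the budget
    return max(0, total - answer_tokens)  # never negative


BUDGET_S = 2.0       # hypothetical latency target for a real-time reply
ANSWER_TOKENS = 150  # hypothetical length of the final answer

if __name__ == "__main__":
    for name, rate in [("Cerebras (quoted)", 1_800), ("GPU (quoted)", 260)]:
        print(name, reasoning_tokens_available(rate, BUDGET_S, ANSWER_TOKENS))
```

Under these assumptions, the quoted Cerebras rate leaves 3,450 tokens for intermediate reasoning within two seconds, versus 370 at the quoted GPU rate.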

In natural language processing, for example, models could generate more accurate and coherent responses, improving automated customer service systems with more contextually aware interactions. In healthcare, AI models could process larger datasets more quickly, leading to faster diagnoses and personalized treatment plans. In the business sector, real-time analytics and decision-making could improve significantly, allowing companies to respond to market changes with greater agility and precision.

Despite its promising technology, Cerebras faces challenges in a market dominated by established players like Nvidia. Nvidia's dominance is partly due to CUDA, a widely used parallel computing platform that has built a strong ecosystem around its GPUs. Because the WSE is fundamentally different from traditional GPUs, software must be adapted to run on it. To ease this transition, Cerebras supports high-level frameworks like PyTorch and offers its own software development kit.

Cerebras is also launching its inference service through an API to its own cloud, making it accessible to organizations without requiring an overhaul of existing infrastructure. The company plans to expand its offerings by soon providing the larger 405-billion-parameter LLaMA model on its WSE, followed by models from Mistral and Cohere. This expansion could further solidify its position in the AI market.

However, Jack Gold, an analyst with J.Gold Associates, cautions that "it's premature to estimate just how superior it will be" until more concrete real-world benchmarks and operations at scale are available.