Can humans be taught to reliably detect AI-generated fakes? How do they affect us on a cognitive level?

OpenAI’s Sora system recently previewed a new wave of synthetic AI-powered media. It probably won’t be long before any form of realistic media, whether audio, video, or image, can be generated from prompts in mere seconds.

As these AI systems grow ever more capable, we’ll need to hone new critical-thinking skills to separate truth from fiction.

So far, Big Tech’s efforts to slow or stop deep fakes have amounted to little more than sentiment. This is not for lack of conviction but because AI content is so realistic.

That makes it hard to detect at the pixel level, while other signals, like metadata and watermarks, have their own flaws.

Moreover, even if AI-generated content were detectable at scale, it would still be difficult to separate authentic, purposeful content from content intended to spread misinformation.

Passing content to human reviewers and using ‘community notes’ (information attached to content, commonly seen on X) offers a possible solution. However, this sometimes involves subjective interpretation and risks incorrect labeling.

For example, in the Israel-Palestine conflict, we’ve witnessed disturbing images labeled as real when they were fake, and vice versa.

When a real image is labeled fake, this can create a ‘liar’s dividend,’ where someone or something can brush off the truth as fake.

So, in the absence of technical methods for stopping deep fakes, what can we do about them?

And to what extent do deep fakes influence our decision-making and psychology?

For example, when people are exposed to fake political images, does this have a tangible impact on their voting behavior?

Let’s look at a couple of studies that assess precisely that.

Do deep fakes affect our opinions and psychological states?

One 2020 study, “Deepfakes and Disinformation: Exploring the Impact of Synthetic Political Video on Deception, Uncertainty, and Trust in News,” explored how deep fake videos influence public perception, particularly trust in news shared on social media.

The research involved a large-scale experiment with 2,005 participants from the UK, designed to measure responses to different types of deep fake videos of former US President Barack Obama.

Participants were randomly assigned to view one of three videos:

- A 4-second clip showing Obama making a surprising statement without any context.

- A 26-second clip that included some hints about the video’s artificial nature but was primarily deceptive.

- A full video with an “educational reveal” in which the deep fake’s artificial nature was explicitly disclosed, featuring Jordan Peele explaining the technology behind it.

Key findings

The study explored three key areas:

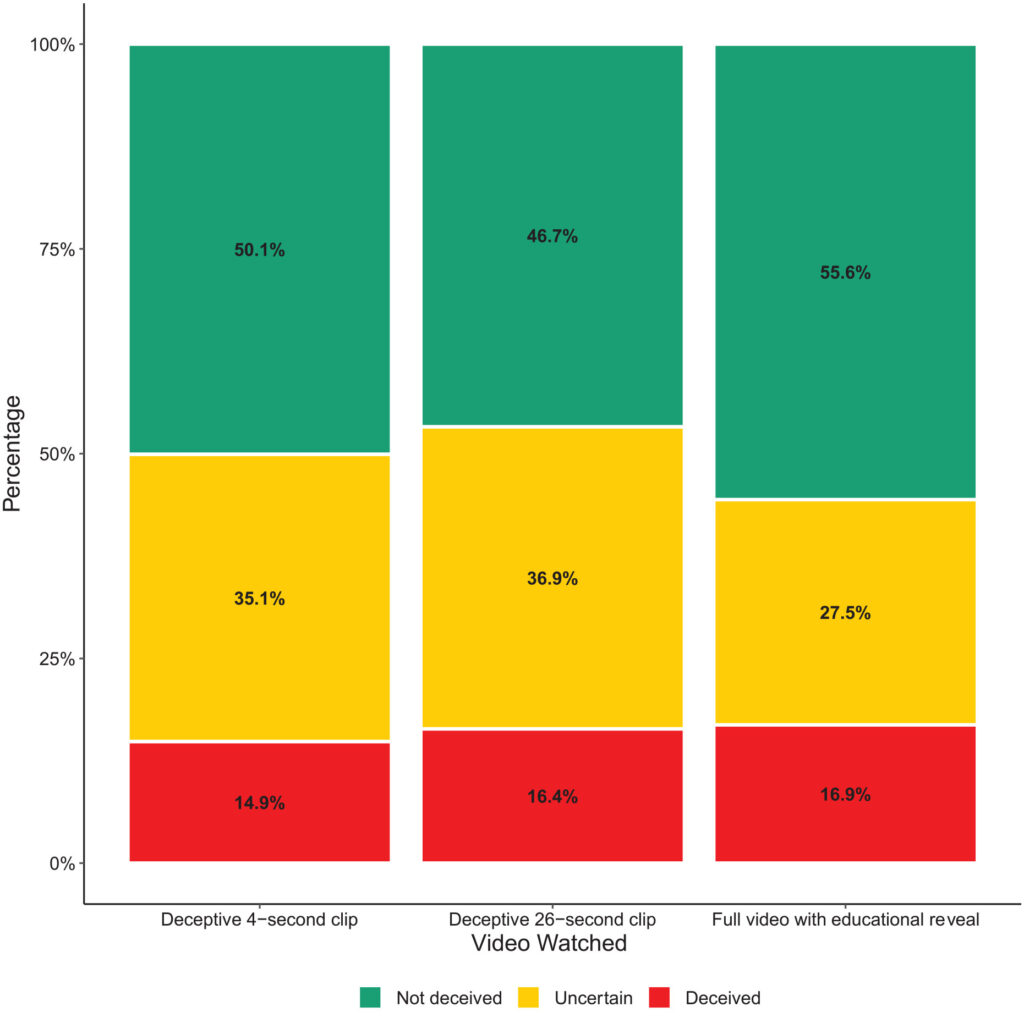

- Deception: The study found minimal evidence of participants believing the false statements in the deep fakes. The share of participants who were misled was relatively low across all treatment groups.

- Uncertainty: However, a key result was increased uncertainty among viewers, especially those who saw the shorter, deceptive clips. About 35.1% of participants who watched the 4-second clip and 36.9% of those who saw the 26-second clip reported feeling uncertain about the video’s authenticity. In contrast, only 27.5% of those who viewed the full educational video felt this way.

- Trust in news: This uncertainty reduced participants’ trust in news on social media. Those exposed to the deceptive deep fakes showed lower trust than those who viewed the educational reveal.

This suggests that exposure to deep fake imagery breeds lasting uncertainty.

Over time, fake imagery could weaken faith in all information, including the truth.

A more recent 2023 study, “Face/Off: Changing the face of movies with deepfakes,” produced similar results and also concluded that fake imagery has long-term impacts.

People ‘remember’ fake content after exposure

Conducted with 436 participants, the Face/Off study investigated how deep fakes might influence our memories of films.

Participants took part in an online survey designed to examine their perceptions and memories of both real and imaginary movie remakes.

At the core of the survey, participants were shown six movie titles: a mix of two actual film remakes and four fictitious ones.

The films were randomized and presented in two formats: half were introduced with short text descriptions, and the other half were paired with brief video clips.

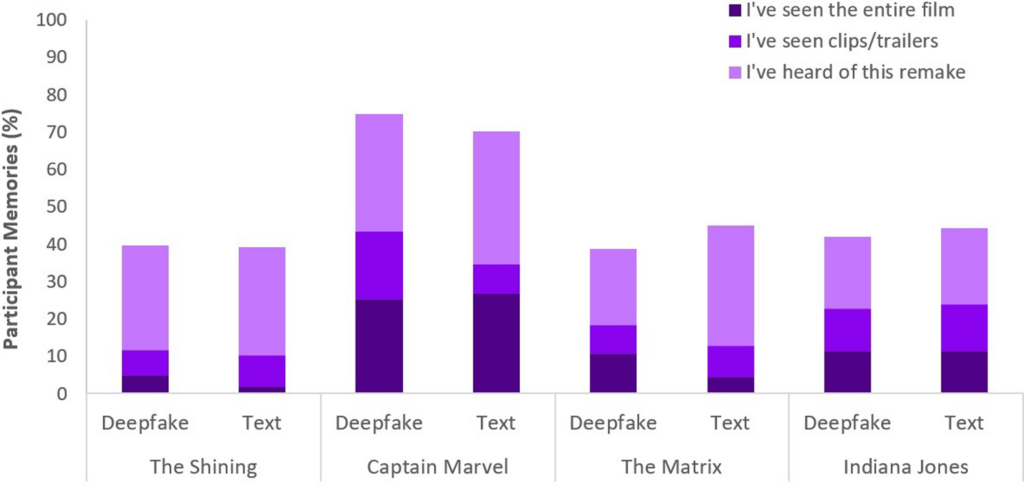

The fictitious remakes were versions of “The Shining,” “The Matrix,” “Indiana Jones,” and “Captain Marvel,” complete with descriptions falsely claiming that high-profile actors starred in these non-existent films.

For example, participants were told about a fake remake of “The Shining” starring Brad Pitt and Angelina Jolie, which never happened.

In contrast, the real remakes presented in the survey, “Charlie & The Chocolate Factory” and “Total Recall,” were described accurately and accompanied by genuine film clips. Mixing real and fake remakes allowed the researchers to investigate how participants distinguish factual from fabricated content.

Participants were asked about their familiarity with each movie: whether they had seen the original film or the remake, or had any prior knowledge of them.

Key findings

- False memory phenomenon: A key outcome of the study was that almost half of the participants (49%) developed false memories of watching fictitious remakes, such as picturing Will Smith as Neo in “The Matrix.” This illustrates the enduring effect that suggestive media, whether deep fake videos or text descriptions, can have on our memory.

- Specifically, “Captain Marvel” topped the list, with 73% of participants recalling its AI remake, followed by “Indiana Jones” at 43%, “The Matrix” at 42%, and “The Shining” at 40%. Among those who mistakenly believed in these remakes, 41% thought the “Captain Marvel” remake was better than the original.

- Comparative impact of deep fakes and text: Another finding was that deep fakes, despite their visual and auditory realism, were no more effective at altering participants’ memories than text descriptions of the same fictitious content. This suggests that the format of the misinformation, visual or textual, does not significantly change its impact on memory distortion, at least in the context of film.

The false memory phenomenon observed in this study is widely researched. It shows how humans construct or reconstruct memories that we’re certain are real when they’re not.

Everyone is susceptible to constructing false memories, and deep fakes seem to trigger this behavior. Viewing certain content can change our perception even when we consciously understand it’s inauthentic.

In both studies, deep fakes had tangible, potentially long-term impacts. The effect might sneak up on us and accumulate over time.

We also need to remember that fake content reaches millions of people, so small shifts in perception scale across the global population.

What can we do about deep fakes?

Going to war with deep fakes means fighting the human brain.

While the rise of fake news and misinformation has forced people to develop new media literacy in recent years, AI-generated synthetic media will require a new level of adjustment.

We have faced such inflection points before, from photography to CGI special effects, but AI will demand an evolution of our critical senses.

Today, we must go beyond simply believing our eyes and rely more on analyzing sources and contextual clues.

It’s essential to interrogate the content’s incentives or biases. Does it align with known facts or contradict them? Is there corroborating evidence from other trustworthy sources?

Another key aspect is establishing legal standards for identifying faked or manipulated media and holding creators accountable.

The US DEFIANCE Act, the UK Online Safety Act, and equivalents worldwide are establishing legal procedures for handling deep fakes. How effective they’ll be remains to be seen.

Strategies for uncovering the truth

Let’s conclude with five strategies for identifying and interrogating potential deep fakes.

While no single strategy is flawless, fostering critical mindsets is the best thing we can do collectively to minimize the impact of AI misinformation.

- Source verification: Examining the credibility and origin of information is a fundamental step. Authentic content often originates from reputable sources with a track record of reliability.

- Technical analysis: Despite their sophistication, deep fakes may exhibit subtle flaws, such as irregular facial expressions or inconsistent lighting. Scrutinize content and consider whether it has been digitally altered.

- Cross-referencing: Verifying information against multiple trusted sources can provide a broader perspective and help confirm the authenticity of content.

- Digital literacy: Understanding the capabilities and limitations of AI technologies is key to assessing content. Digital literacy education in schools and the media, covering how AI works and its ethical implications, will be crucial.

- Cautious interaction: Engaging with AI-generated misinformation amplifies its effects. Be careful when liking, sharing, or reposting content you’re doubtful about.

As deep fakes evolve, so will the techniques required to detect them and mitigate harm. 2024 will be revealing, as around half the world’s population is set to vote in major elections.

Evidence suggests that deep fakes can affect our perception, so it’s far from outlandish to think that AI misinformation could materially influence election outcomes.

As we move forward, ethical AI practices, digital literacy, regulation, and critical engagement will be pivotal in shaping a future where technology amplifies, rather than obscures, the truth.