AI has a myriad of uses, but one of its most concerning applications is the creation of deep fake media and misinformation.

A new study from Google DeepMind and Jigsaw, a Google technology incubator that monitors societal threats, analyzed misuse of AI between January 2023 and March 2024.

It assessed some 200 real-world incidents of AI misuse, revealing that creating and disseminating deceptive deep fake media, particularly content targeting politicians and public figures, is the most common form of malicious AI use.

Deep fakes, synthetic media generated using AI algorithms to create highly realistic but fake images, videos, and audio, have become more lifelike and pervasive.

Incidents such as the explicit fake images of Taylor Swift that appeared on X showed that such images can reach millions of people before they are deleted.

But most insidious are deep fakes targeting political issues, such as the Israel-Palestine conflict. In some cases, not even the fact checkers charged with labeling them as “AI-generated” can reliably determine whether they are authentic.

The DeepMind study collected data from a diverse array of sources, including social media platforms like X and Reddit, online blogs, and media reports.

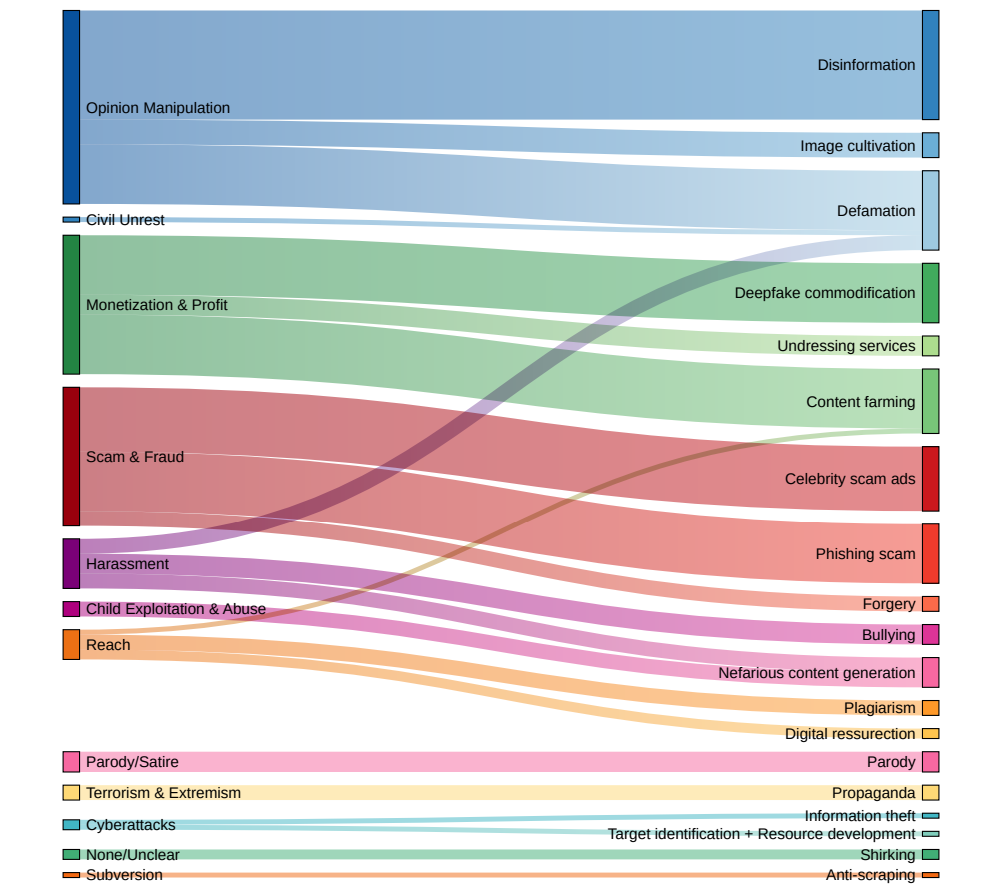

Each incident was analyzed to determine the specific type of AI technology misused, the intended purpose behind the abuse, and the level of technical expertise required to carry out the malicious activity.

Deep fakes are the dominant type of AI misuse

The findings paint an alarming picture of the current landscape of malicious AI use:

- Deep fakes emerged as the dominant form of AI misuse, accounting for nearly twice as many incidents as the next most prevalent category.

- The second most frequently observed type of AI abuse was using language models and chatbots to generate and disseminate disinformation online. By automating the creation of misleading content, bad actors can flood social media and other platforms with fake news and propaganda at an unprecedented scale.

- Influencing public opinion and political narratives was the primary motivation behind over a quarter (27%) of the AI misuse cases analyzed. This finding underscores the grave threat that deep fakes and AI-generated disinformation pose to democratic processes and the integrity of elections worldwide.

- Financial gain was identified as the second most common driver of malicious AI activity, with unscrupulous actors offering paid services for creating deep fakes, including non-consensual explicit imagery, and leveraging generative AI to mass-produce fake content for profit.

- The majority of AI misuse incidents involved readily accessible tools and services that required minimal technical expertise to operate. This low barrier to entry greatly expands the pool of potential malicious actors, making it easier than ever for individuals and groups to engage in AI-powered deception and manipulation.

Nahema Marchal, the study’s lead author and a DeepMind researcher, explained the evolving landscape of AI misuse to the Financial Times: “There had been a lot of understandable concern around quite sophisticated cyber attacks facilitated by these tools,” continuing, “What we saw were fairly common misuses of GenAI [such as deep fakes that] might go under the radar a little bit more.”

Policymakers, technology companies, and researchers must work together to develop comprehensive strategies for detecting and countering deep fakes, AI-generated disinformation, and other forms of AI misuse.

But the truth is, they’ve already tried, and largely failed. Just recently, we’ve seen more cases of children getting caught up in deep fake incidents, showing that the societal harm these fakes inflict can be grave.

At present, tech companies can’t reliably detect deep fakes at scale, and deep fakes will only grow more realistic and harder to detect over time.

And once text-to-video systems like OpenAI’s Sora become widely available, there’ll be a whole new dimension of deep fakes to deal with.