AI research is fueled by the pursuit of ever-greater sophistication, which includes training systems to think and behave like humans.

The end goal? Who knows. The goal for now? To create autonomous, generalized AI agents capable of performing a wide range of tasks.

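This concept is known as artificial general intelligence (AGI). It is sometimes used interchangeably with superintelligence, although superintelligence usually refers to systems that surpass human ability rather than merely match it.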

It’s difficult to pin down precisely what AGI entails because there’s virtually no consensus on what ‘intelligence’ is, or indeed, when or how artificial systems might achieve it.

Some even believe AI, in its current state, can never truly attain general intelligence.

Professor Tony Prescott and Dr. Stuart Wilson from the University of Sheffield described generative language models, like ChatGPT, as inherently limited because they’re “disembodied” and have no sensory perception or grounding in the natural world.

Meta’s chief AI scientist, Yann LeCun, said even a house cat’s intelligence is unfathomably more advanced than today’s best AI systems.

“But why aren’t those systems as smart as a cat?” LeCun asked at the World Government Summit in Dubai.

“A cat can remember, can understand the physical world, can plan complex actions, can do some level of reasoning, actually much better than the biggest LLMs. That tells you we’re missing something conceptually big to get machines to be as intelligent as animals and humans.”

While these skills may not be essential to achieving AGI, there is some consensus that moving complex AI systems from the lab into the real world will require them to adopt behaviors similar to those observed in natural organisms.

So, how can this be achieved? One approach is to dissect elements of cognition and work out how AI systems can mimic them.

A previous DailyAI essay investigated curiosity and its ability to guide organisms toward new experiences and goals, fueling the collective evolution of the natural world.

But there is another emotion, another essential component of our existence, from which AGI could benefit. And that’s fear.

How AI can learn from biological fear

Far from being a weakness or a flaw, fear is one of evolution’s most potent tools for keeping organisms safe.

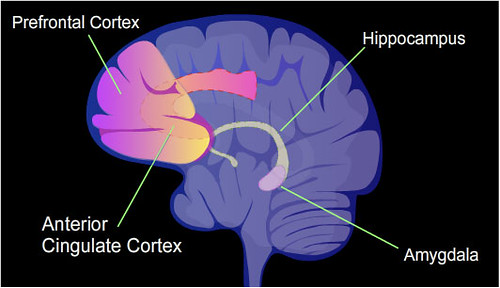

The amygdala is the central structure that governs fear in vertebrates. In humans, it’s a small, almond-shaped structure nestled deep within the brain’s temporal lobes.

Often dubbed the “fear center,” the amygdala serves as an early warning system, constantly scanning incoming sensory information for potential threats.

When a threat is detected, whether it’s the sudden lurch of a braking car ahead or a shifting shadow in the darkness, the amygdala springs into action, triggering a cascade of physiological and behavioral changes optimized for a rapid defensive response:

- Heart rate and blood pressure surge, priming the body for “fight or flight”

- Attention narrows and sharpens, homing in on the source of danger

- Reflexes quicken, readying muscles for split-second evasive action

- Cognitive processing shifts to a rapid, intuitive, “better safe than sorry” mode

This response is not a simple reflex but a highly adaptive, context-sensitive suite of changes that flexibly tailors behavior to the nature and severity of the threat at hand.

It’s also exceptionally fast. We become consciously aware of a threat around 300-400 milliseconds after initial detection.

Moreover, the amygdala doesn’t operate in isolation. It’s densely interconnected with other key brain regions involved in perception, memory, reasoning, and action.

Why fear might benefit AI

So, why does fear matter in the context of AI anyway?

In biological systems, fear serves as a crucial mechanism for rapid threat detection and response. By mimicking this system in AI, we could potentially create more robust and adaptable artificial systems.

This is particularly pertinent to autonomous systems that interact with the real world. Case in point: despite rapid advances in AI capabilities in recent years, driverless cars still tend to fall short on safety and reliability.

Regulators are probing numerous fatal incidents involving self-driving cars, including Tesla models with Autopilot and Full Self-Driving features.

Speaking to the Guardian in 2022, Matthew Avery, director of research at Thatcham Research, explained why driverless cars have been so challenging to refine:

“Number one is that this stuff is harder than manufacturers realized,” Avery states.

Avery estimates that around 80% of autonomous driving functions involve relatively simple tasks like lane following and basic obstacle avoidance.

The remaining tasks, however, are much more difficult. “The last 10% is really difficult,” Avery emphasizes, like “when you’ve got, you know, a cow standing in the middle of the road that doesn’t want to move.”

Sure, cows aren’t fear-inspiring in their own right. But any attentive driver would probably fancy their chances of stopping if they were hurtling toward one at speed.

An AI system relies on its training and experience to see the cow and make the appropriate decision. That process isn’t always quick or reliable enough, hence a high risk of collisions and accidents, particularly when the system encounters something it hasn’t been trained to understand.

Imbuing AI systems with fear might provide an alternative, quicker, and more efficient means of reaching that decision.

In biological systems, fear triggers rapid, instinctive responses that don’t require complex processing. For instance, a human driver might instinctively brake at the mere suggestion of an obstacle, even before fully processing what it is.

This near-instantaneous, fear-driven reaction could be the difference between a near-miss and a collision.

Moreover, fear-based responses in nature are highly adaptable and generalize well to novel situations.

An AI system with a fear-like mechanism could be better equipped to handle unforeseen scenarios.
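To make the idea concrete, here is a minimal Python sketch of a fear-like “fast path” sitting in front of a slower planner. It assumes a hypothetical `Observation` that carries only a range and a closing speed, plus an optional label from a slower classifier; the time-to-collision threshold `FEAR_TTC_S` and the planner’s rules are illustrative, not taken from any real driving stack. The point is that the reflex fires on geometry alone, before anything has worked out whether the obstacle is a cow.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: anything closer than 2 s time-to-collision counts as a threat.
FEAR_TTC_S = 2.0


@dataclass
class Observation:
    distance_m: float            # range to the nearest object ahead, in meters
    closing_speed_mps: float     # how fast that range is shrinking, in m/s
    label: Optional[str] = None  # slow classifier's result, None if not ready yet


def time_to_collision(obs: Observation) -> float:
    """Seconds until contact at the current closing speed (infinite if not closing)."""
    if obs.closing_speed_mps <= 0:
        return float("inf")
    return obs.distance_m / obs.closing_speed_mps


def fear_reflex(obs: Observation) -> bool:
    """Fast path: uses only geometry, so it can fire before the object is identified."""
    return time_to_collision(obs) < FEAR_TTC_S


def deliberate_planner(obs: Observation) -> str:
    """Slow-path stand-in: only acts on objects it has recognized."""
    if obs.label in {"cow", "pedestrian", "vehicle"}:
        return "brake"
    return "continue"


def decide(obs: Observation) -> str:
    # The fear reflex pre-empts the planner whenever it fires.
    if fear_reflex(obs):
        return "brake"
    return deliberate_planner(obs)


# An unidentified object 20 m ahead, closing at 15 m/s (TTC about 1.3 s): the reflex brakes.
print(decide(Observation(distance_m=20.0, closing_speed_mps=15.0)))  # brake
```

The design choice worth noting is the ordering: the cheap check runs first and can override the planner, but never the other way round, which mirrors the “better safe than sorry” bias described above.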

Deconstructing fear: insights from the fruit fly

We’re far from creating artificial systems that replicate the integrated, specialized neural regions of biological brains, but that doesn’t mean we can’t model these mechanisms in other ways.

So, let’s zoom out from the amygdala and look at how invertebrates, such as small insects, detect and process fear.

While they don’t have a structure directly analogous to the amygdala, that doesn’t mean they lack circuitry that achieves a similar purpose.

For example, recent studies into the fear responses of Drosophila melanogaster, the common fruit fly, yielded intriguing insights into the fundamental building blocks of primitive emotion.

In an experiment conducted at Caltech in 2015, researchers led by David Anderson exposed flies to an overhead shadow designed to mimic an approaching predator.

Using high-speed cameras and machine vision algorithms, they meticulously analyzed the flies’ behavior, looking for signs of what Anderson calls “emotion primitives,” the basic components of an emotional state.

Remarkably, the flies exhibited a set of behaviors that closely paralleled the fear responses seen in mammals.

When the shadow appeared, the flies froze in place, their wings cocked at an angle to prepare for a quick escape.

As the threat persisted, some flies took flight, darting away from the shadow at high speed. Others remained frozen for an extended period, suggesting a state of heightened arousal and vigilance.

Crucially, these responses weren’t mere reflexes triggered automatically by the visual stimulus. Instead, they appeared to reflect an enduring internal state, a kind of “fly fear” that persisted even after the threat had passed.

This was evident in the fact that the flies’ heightened defensive behaviors could be elicited by a different stimulus (a puff of air) even minutes after the initial shadow exposure.

Moreover, the intensity and duration of the fear response scaled with the level of threat. Flies exposed to multiple shadow presentations showed progressively stronger and longer-lasting defensive behaviors, indicating a kind of “fear learning” that allowed them to calibrate their response based on the severity and frequency of the danger.

As Anderson and his team argue, these findings suggest that the building blocks of emotional states (persistence, scalability, and generalization) are present even in the simplest creatures.
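A toy way to picture those three primitives in code is a single internal variable that threats push up and time slowly pulls down. The `FearState` class below is a hypothetical sketch with made-up constants (`decay_per_s`, `gain`), not a model of the fly’s actual neural circuitry: each threat adds to the state (scalability), the state decays gradually instead of switching off (persistence), and it amplifies the response to any later stimulus, not just the shadow (generalization).

```python
import math


class FearState:
    """Toy persistent 'fear' variable; all constants are hypothetical."""

    def __init__(self, decay_per_s: float = 0.01, gain: float = 1.0) -> None:
        self.level = 0.0
        self.decay_per_s = decay_per_s
        self.gain = gain

    def threat(self, intensity: float) -> None:
        # Scalability: each exposure adds to the state, so repeated shadows build up fear.
        self.level += self.gain * intensity

    def tick(self, dt_s: float) -> None:
        # Persistence: the state decays exponentially rather than resetting when the threat ends.
        self.level *= math.exp(-self.decay_per_s * dt_s)

    def respond(self, stimulus: float) -> float:
        # Generalization: any stimulus, not just the shadow, is amplified by current fear.
        return stimulus * (1.0 + self.level)


fly = FearState()
print(fly.respond(1.0))            # 1.0: a calm fly's baseline reaction to an air puff
fly.threat(1.0)                    # first overhead shadow
fly.threat(1.0)                    # second shadow
fly.tick(60.0)                     # a minute passes with no threat
print(round(fly.respond(1.0), 2))  # 2.1: the air puff still triggers an amplified response
```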

If we can decode how simpler organisms like fruit flies process and respond to threats, we can potentially extract the core principles of adaptive, self-preserving behavior.

Primitive forms of fear could then be applied to develop AI systems that are more robust, safer, and attuned to real-world risks and challenges.

Infusing AI with fear circuitry

It’s a great theory, but can AI be imbued with an authentic, functional form of ‘fear’ in practice?

One intriguing study tested exactly that, with the aim of improving the safety of driverless cars and other autonomous systems.

“Fear-Neuro-Inspired Reinforcement Learning for Safe Autonomous Driving,” led by Chen Lv at Nanyang Technological University, Singapore, developed a fear-neuro-inspired reinforcement learning (FNI-RL) framework for improving the performance of driverless cars.

By building AI systems that can recognize and respond to the subtle cues and patterns that trigger human defensive driving, which the researchers term “fear neurons,” we may be able to create self-driving cars that navigate the road with the intuitive caution and risk sensitivity they need.

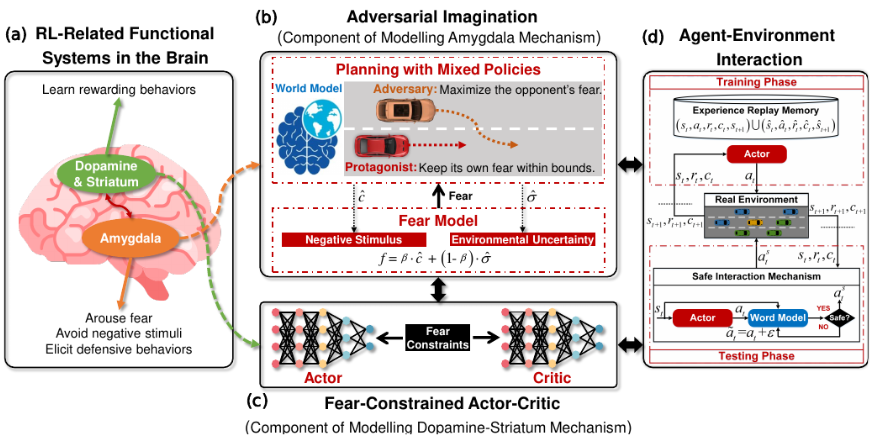

The FNI-RL framework translates key principles of the brain’s fear circuitry into a computational model of threat-sensitive driving, allowing an autonomous vehicle to learn and deploy adaptive defensive strategies in real time.

It involves three key components modeled after core elements of the neural fear response:

- A “fear model” that learns to recognize and assess driving situations that signal heightened collision risk, playing a role analogous to the threat-detection functions of the amygdala.

- An “adversarial imagination” module that mentally simulates dangerous scenarios, allowing the system to safely “practice” defensive maneuvers without real-world consequences. This is a form of risk-free learning reminiscent of the mental rehearsal capacities of human drivers.

- A “fear-constrained” decision-making engine that weighs potential actions not just by their immediately expected rewards (e.g., progress toward a destination), but also by their assessed level of risk as gauged by the fear model and adversarial imagination components. This mirrors the amygdala’s role in flexibly guiding behavior based on an ongoing calculus of threat and safety.
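The interplay of the three components can be sketched in a few dozen lines of Python. This is a simplified illustration under heavy assumptions, not the FNI-RL authors’ implementation: the state is a hypothetical (gap, closing speed) pair, the “fear model” is a hand-written time-to-collision risk score where the real system learns one, “adversarial imagination” is approximated as the worst case over a grid of imagined sudden-braking events by the lead car, and the “fear constraint” is a simple hard risk budget. Action names, rewards, and constants are all made up.

```python
import math
from typing import Tuple

# Hypothetical state: (gap to the lead vehicle in m, closing speed in m/s).
State = Tuple[float, float]

# Hypothetical actions: change in closing speed (m/s) and reward for progress.
SPEED_CHANGE = {"accelerate": 2.0, "keep": 0.0, "brake": -3.0}
PROGRESS = {"accelerate": 1.0, "keep": 0.6, "brake": 0.1}
TTC_SCALE_S = 3.0  # hypothetical: how quickly fear rises as time-to-collision shrinks


def step(state: State, action: str, dt_s: float = 1.0) -> State:
    """Crude one-step motion model used for imagined rollouts."""
    gap, closing = state
    closing += SPEED_CHANGE[action]
    return (gap - closing * dt_s, closing)


def fear_model(state: State) -> float:
    """Stand-in 'fear model': collision risk in [0, 1] from time-to-collision.
    (FNI-RL learns this; here it is hand-written.)"""
    gap, closing = state
    if gap <= 0.0:
        return 1.0  # contact
    if closing <= 0.0:
        return 0.0  # not closing in
    return math.exp(-(gap / closing) / TTC_SCALE_S)


def imagined_risk(state: State, action: str, max_shock: float = 4.0, n: int = 9) -> float:
    """'Adversarial imagination' stand-in: worst fear over imagined sudden braking
    by the lead car, which raises our closing speed by 0..max_shock m/s."""
    gap, closing = state
    shocks = [max_shock * i / (n - 1) for i in range(n)]
    return max(fear_model(step((gap, closing + s), action)) for s in shocks)


def choose_action(state: State, risk_budget: float = 0.5) -> str:
    """'Fear-constrained' choice: best progress among actions within the risk budget,
    otherwise the least frightening action."""
    risks = {a: imagined_risk(state, a) for a in SPEED_CHANGE}
    safe = [a for a in SPEED_CHANGE if risks[a] <= risk_budget]
    if safe:
        return max(safe, key=lambda a: PROGRESS[a])
    return min(risks, key=lambda a: risks[a])


for s in [(100.0, 0.0), (25.0, 3.0), (10.0, 5.0)]:
    print(s, choose_action(s))  # accelerate, then keep, then brake
```

With these numbers, an open road lets the car accelerate, a moderately close lead vehicle caps it at holding speed, and a dangerously close one forces braking. The constraint filters out any action whose imagined worst case exceeds the budget before rewards are compared, so progress can never buy back excessive risk.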

To put this approach through its paces, the researchers tested it in a series of high-fidelity driving simulations featuring challenging, safety-critical scenarios:

- Sudden cut-ins and swerves by aggressive drivers

- Erratic pedestrians jaywalking into traffic

- Sharp turns and blind corners with limited visibility

- Slick roads and poor weather conditions

Across these tests, the FNI-RL-equipped cars demonstrated remarkable safety performance, consistently outperforming human drivers and traditional reinforcement learning (RL) techniques at avoiding collisions and practicing defensive driving skills.

In one striking example, the FNI-RL system successfully navigated a sudden, high-speed traffic merge with a 90% success rate, compared to just 60% for a state-of-the-art RL baseline.

It even achieved these safety gains without sacrificing driving performance or passenger comfort.

In other tests, the researchers probed the FNI-RL system’s ability to learn and generalize defensive strategies across driving environments.

In a simulation of a busy city intersection, the AI learned in just a few trials to recognize the telltale signs of a reckless driver (sudden lane changes, aggressive acceleration) and pre-emptively adjust its own behavior to give that driver a wider berth.

Remarkably, the system was then able to transfer this learned wariness to a novel highway driving scenario, automatically registering dangerous cut-in maneuvers and responding with evasive action.
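One simple way to see why such wariness can transfer is that the cues involved describe the other driver’s behavior rather than the road layout. In the hypothetical sketch below, which is not the study’s method, a hand-tuned recklessness score built from lane-change rate and peak acceleration widens the following gap. Because neither input depends on whether the car is at an intersection or on a highway, the same rule applies unchanged in both. The `TrackedDriver` type, normalizing constants, and gap values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class TrackedDriver:
    lane_changes_per_min: float  # observed lane-change rate
    peak_accel_mps2: float       # strongest observed acceleration, in m/s^2


def recklessness(d: TrackedDriver) -> float:
    """Hypothetical hand-tuned score in [0, 1]; the study's system learns its cues."""
    lane_term = min(d.lane_changes_per_min / 4.0, 1.0)
    accel_term = min(d.peak_accel_mps2 / 4.0, 1.0)
    return 0.5 * lane_term + 0.5 * accel_term


def following_gap_s(d: TrackedDriver, base_gap_s: float = 2.0, extra_gap_s: float = 2.0) -> float:
    """Give riskier drivers a wider berth: the time gap grows with the recklessness score."""
    return base_gap_s + extra_gap_s * recklessness(d)


calm = TrackedDriver(lane_changes_per_min=0.0, peak_accel_mps2=1.0)
weaving = TrackedDriver(lane_changes_per_min=6.0, peak_accel_mps2=5.0)
# The rule never looks at the road type, so it applies at an intersection or on a highway.
print(following_gap_s(calm), following_gap_s(weaving))  # 2.25 4.0
```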

This demonstrates the potential of neurally inspired emotional intelligence to enhance the safety and robustness of autonomous driving systems.

By endowing cars with a “digital amygdala” tuned to the visceral cues of road risk, we may be able to create self-driving cars that navigate the challenges of the open road with a fluid, proactive defensive awareness.

Towards a science of emotionally aware robotics

While recent AI advancements have relied on brute-force computational power, researchers are now drawing inspiration from human emotional responses to create smarter and more adaptive artificial systems.

This paradigm, known as “bio-inspired AI,” extends beyond self-driving cars to fields like manufacturing, healthcare, and space exploration.

There are many exciting angles to explore. For example, robotic arms are being developed with “digital nociceptors” that mimic pain receptors, enabling swift reactions to potential damage.

In terms of hardware, IBM’s bio-inspired analog chips use “memristors” to store varying numerical values, reducing data transmission between memory and processor.
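The principle behind this kind of analog in-memory computing can be sketched with an idealized crossbar: each memristive device stores a weight as a conductance, and applying input voltages produces output currents that already equal a matrix-vector product, so the weights never travel to a separate processor. The Python below ignores wire resistance, noise, and device drift, and the 16-level quantization and `g_max_s` value are hypothetical device parameters, not a description of IBM’s chips.

```python
from typing import List

Matrix = List[List[float]]


def program_conductances(weights: Matrix, g_max_s: float = 1e-4, levels: int = 16) -> Matrix:
    """Store weights in [0, 1] as the nearest of a few discrete conductance levels
    (in siemens). The level count and g_max are hypothetical device parameters."""
    step = g_max_s / (levels - 1)
    return [[round(w * (levels - 1)) * step for w in row] for row in weights]


def crossbar_currents(conductances_s: Matrix, voltages_v: List[float]) -> List[float]:
    """Idealized read-out: each device passes I = G * V (Ohm's law) and each output
    line sums its devices' currents (Kirchhoff's current law), giving I = G @ V."""
    return [sum(g * v for g, v in zip(row, voltages_v)) for row in conductances_s]


weights = [[0.2, 1.0], [0.5, 0.0]]
g = program_conductances(weights)
print(crossbar_currents(g, [0.1, 0.2]))  # output currents in amperes
```

The multiply-and-add happens in the physics of the array itself, which is why storing weights in place cuts the memory-to-processor traffic mentioned above.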

Similarly, researchers at the Indian Institute of Technology, Bombay, have designed a chip for Spiking Neural Networks (SNNs), which closely mimic biological neuron function.
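Spiking networks are commonly built from leaky integrate-and-fire (LIF) neurons, which accumulate input, leak charge over time, and emit a discrete spike only when a threshold is crossed. The sketch below simulates one LIF neuron with forward-Euler steps in normalized units; the parameter values are illustrative defaults, not those of the IIT Bombay chip.

```python
from typing import List


def simulate_lif(
    input_current: List[float],
    dt_ms: float = 1.0,
    tau_ms: float = 20.0,
    v_rest: float = 0.0,
    v_thresh: float = 1.0,
    v_reset: float = 0.0,
) -> List[int]:
    """Leaky integrate-and-fire neuron; returns the time steps at which it spikes.
    Units are normalized and all parameter values are illustrative."""
    v = v_rest
    spikes = []
    for t, i_in in enumerate(input_current):
        # Forward-Euler step of tau * dv/dt = -(v - v_rest) + I: leak toward rest, integrate input.
        v += (dt_ms / tau_ms) * (-(v - v_rest) + i_in)
        if v >= v_thresh:
            spikes.append(t)  # emit a discrete spike event...
            v = v_reset       # ...and reset the membrane potential
    return spikes


print(simulate_lif([0.5] * 30))  # []: weak input leaks away before reaching threshold
print(simulate_lif([2.0] * 30))  # [13, 27]: strong input produces regular spikes
```

Because the neuron only communicates when it spikes, hardware built around this model can stay largely idle between events, which is why energy per spike is a natural figure of merit for such chips.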

Professor Udayan Ganguly reports that this chip achieves “5,000 times lower energy per spike at a similar area and 10 times lower standby power” compared to conventional designs.

These advancements in neuromorphic computing bring us closer to what Ganguly describes as “an extremely low-power neurosynaptic core and real-time on-chip learning mechanism,” key elements for autonomous, biologically inspired neural networks.

Combining nature-inspired AI technology with architectures informed by natural emotional states like fear or curiosity could thrust AI into an entirely new state of being.

As researchers push these boundaries, they’re not just creating more efficient machines; they’re potentially birthing a new form of intelligence.

As this line of research evolves, autonomous machines might roam the world among us, reacting to unpredictable environmental cues with curiosity, fear, and other emotions considered distinctly human.

The impact? That’s another story altogether.