We hear a lot about AI safety, but does that mean it's heavily featured in research?

A new study from Georgetown University's Emerging Technology Observatory suggests that, despite the noise, AI safety research occupies only a tiny minority of the industry's research focus.

The researchers analyzed over 260 million scholarly publications and found that a mere 2% of AI-related papers published between 2017 and 2022 directly addressed topics related to AI safety, ethics, robustness, or governance.

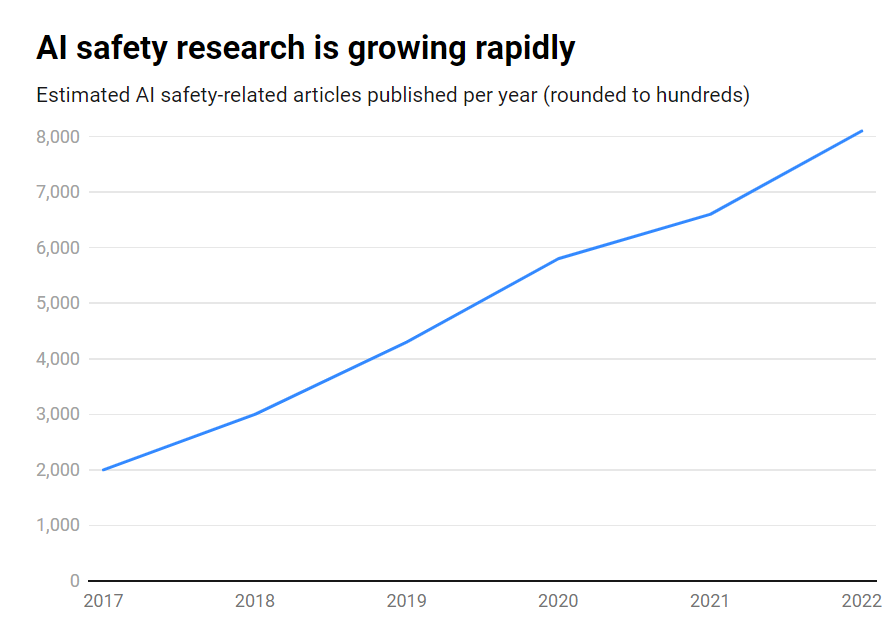

While the number of AI safety publications grew an impressive 315% over that period, from around 1,800 to over 7,000 per year, the topic remains a peripheral concern.
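As a quick sanity check on these figures, assuming the 315% is measured against a 2017 baseline of roughly 1,800 papers, a 315% increase means the final yearly count is 4.15 times the starting count:

```latex
N_{2022} = N_{2017}\,(1 + 3.15) \approx 1{,}800 \times 4.15 = 7{,}470
```

That result is consistent with "over 7,000 per year." Computing the growth rate from the rounded endpoints alone, (7,000 − 1,800) / 1,800, gives about 289%, which simply reflects the rounding in the reported numbers.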

Here are the key findings:

- Only 2% of AI research from 2017-2022 focused on AI safety

- AI safety research grew 315% in that period, but is dwarfed by overall AI research

- The US leads in AI safety research, while China lags behind

- Key challenges include robustness, fairness, transparency, and maintaining human control

Many leading AI researchers and ethicists have warned of existential risks if artificial general intelligence (AGI) is developed without sufficient safeguards and precautions.

Imagine an AGI system that can recursively improve itself, rapidly exceeding human intelligence while pursuing goals misaligned with our values. It's a scenario that some argue could spiral out of our control.

It's not one-way traffic, however. In fact, a number of AI researchers believe AI safety is overhyped.

Beyond that, some even think the hype has been manufactured to help Big Tech enforce regulations and eliminate grassroots and open-source competitors.

Nevertheless, even today's narrow AI systems, trained on past data, can exhibit biases, produce harmful content, violate privacy, and be used maliciously.

So, while AI safety needs to look to the future, it also needs to address risks in the here and now, where research is arguably insufficient as deepfakes, bias, and other issues continue to loom large.

Effective AI safety research needs to address nearer-term challenges as well as longer-term speculative risks.

The US leads AI safety research

Drilling down into the data, the US is the clear leader in AI safety research, home to 40% of related publications compared to 12% from China.

However, China's safety output lags far behind its overall AI research: while 5% of American AI research touched on safety, only 1% of China's did.

One could speculate that probing Chinese research is an altogether difficult task. Plus, China has been proactive about regulation, arguably more so than the US, so this data might not give the country's AI industry a fair hearing.

At the institution level, Carnegie Mellon University, Google, MIT, and Stanford lead the pack.

But globally, no organization produced more than 2% of the total safety-related publications, highlighting the need for a larger, more concerted effort.

Safety imbalances

So what can be done to correct this imbalance?

That depends on whether one thinks AI safety is a pressing risk on par with nuclear war, pandemics, and the like. There is no clear-cut answer to this question, which makes AI safety a highly speculative topic with little agreement among researchers.

Safety research and ethics are also somewhat tangential to machine learning, requiring different skill sets and academic backgrounds, and may not be well funded.

Closing the AI safety gap will also require confronting questions around openness and secrecy in AI development.

The biggest tech companies conduct extensive internal safety research that has never been published. As the commercialization of AI heats up, companies are becoming more protective of their AI breakthroughs.

OpenAI, for one, was a research powerhouse in its early days.

The company used to produce in-depth independent audits of its products, labeling biases and risks such as sexist bias in its CLIP project.

Anthropic is still actively engaged in public AI safety research, frequently publishing studies on bias and jailbreaking.

DeepMind also documented the possibility of AI models developing 'emergent goals' and actively contradicting their instructions or becoming adversarial to their creators.

Overall, though, safety has taken a backseat to progress as Silicon Valley lives by its motto to 'move fast and break things.'

The Georgetown study ultimately highlights that universities, governments, tech companies, and research funders need to invest more effort and money in AI safety.

Some have also called for an international body for AI safety, similar to the International Atomic Energy Agency (IAEA), which was established after a series of nuclear incidents made deep international cooperation necessary.

Will AI need its own disaster to spur that level of state and corporate cooperation? Let's hope not.