Large language models like GPT-4o can perform incredibly complex tasks, but even the top models struggle with some basic reasoning challenges that children can solve.

In an interview with CBS, the ‘godfather of AI’, Geoffrey Hinton, said that AI systems might be more intelligent than we know and that there’s a chance the machines could take over.

When asked about the level of current AI technology, Hinton said, “I think we’re moving into a period when for the first time ever we may have things more intelligent than us.”

Meta’s chief AI scientist, Yann LeCun, would have us believe that we’re a long way off from seeing AI achieve even “dog-level” intelligence.

So which is it?

This week, users on X posted examples of the incredible coding ability that Anthropic’s new Claude model exhibits. Others ran experiments to highlight how AI models still struggle with very basic reasoning.

River crossing puzzle

The classic river crossing puzzle has several variations, but Wikipedia’s version sums it up like this:

A farmer with a wolf, a goat, and a cabbage must cross a river by boat. The boat can carry only the farmer and a single item. If left unattended together, the wolf would eat the goat, or the goat would eat the cabbage. How can they cross the river without anything being eaten?

Finding the solution requires some basic planning and reasoning about different scenarios, but it’s not a particularly difficult problem to solve. If you’re human.
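To make that "planning over scenarios" concrete, here is a minimal sketch of how a program can solve the puzzle by brute force. It assumes only the Wikipedia rules quoted above and uses a plain breadth-first search over bank states; the names `solve` and `is_safe` are illustrative and not taken from any source.

```python
from collections import deque
from itertools import chain


def is_safe(items, forbidden, left_bank, farmer_on_left):
    """Safe if the bank WITHOUT the farmer holds no forbidden pair."""
    unattended = set(items) - left_bank if farmer_on_left else left_bank
    return not any(pair <= unattended for pair in forbidden)


def solve(items, forbidden):
    """Breadth-first search; returns the shortest list of boat loads."""
    everything = frozenset(items)
    start = (everything, True)    # all items and the farmer on the left bank
    goal = (frozenset(), False)   # all items and the farmer on the right bank
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, farmer_left), path = queue.popleft()
        if (left, farmer_left) == goal:
            return path
        here = left if farmer_left else everything - left
        # The farmer crosses alone (None) or with one item from his own bank.
        for cargo in chain([None], sorted(here)):
            new_left = left
            if cargo is not None:
                new_left = left - {cargo} if farmer_left else left | {cargo}
            state = (new_left, not farmer_left)
            if state not in seen and is_safe(items, forbidden, new_left, not farmer_left):
                seen.add(state)
                queue.append((state, path + [cargo or "nothing"]))
    return None  # no safe sequence of crossings exists


ITEMS = ("wolf", "goat", "cabbage")
# Pairs that must not be left together without the farmer.
FORBIDDEN = ({"wolf", "goat"}, {"goat", "cabbage"})

plan = solve(ITEMS, FORBIDDEN)
for number, cargo in enumerate(plan, start=1):
    print(f"Crossing {number}: farmer takes {cargo}")
```

Under these rules the search finds a plan of seven crossings that starts and ends with the goat. Because the search is exhaustive, it reasons from the rules it is given rather than from a remembered answer.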

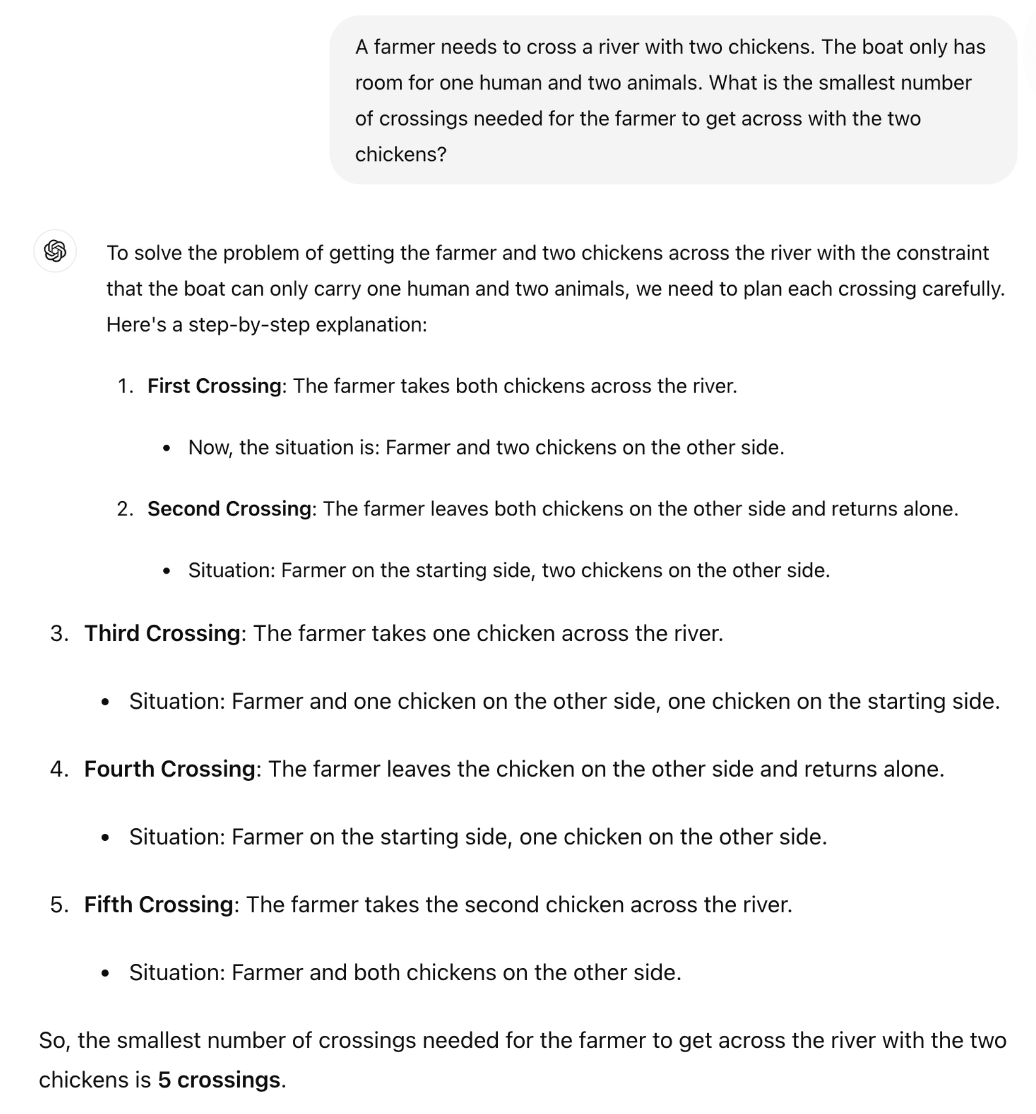

Can GPT-4o solve it? If you copy and paste the puzzle into ChatGPT, it gives you the right answer, but that Wikipedia page was almost certainly in its training data.

What if we made the puzzle a lot simpler and changed it slightly so the LLM couldn’t rely on its training data?

British mathematics professor Sir William Timothy Gowers showed how easily the inability of LLMs to apply logic is exposed.

The correct answer to the puzzle is that only one trip is required. But it seems like ChatGPT is trying to remember an answer rather than simply reasoning through the puzzle.

Is Claude 3.5 Sonnet any better?

Meta data scientist Colin Fraser’s experiment confirms that even the leading AI model currently available can’t solve this simple puzzle.

Claude still can’t solve the impossible one farmer one sheep one boat problem pic.twitter.com/TU13wermLZ

— Colin Fraser (@colin_fraser) June 20, 2024

It may have been a little disingenuous for a data scientist from Meta not to show his results using Llama 3.

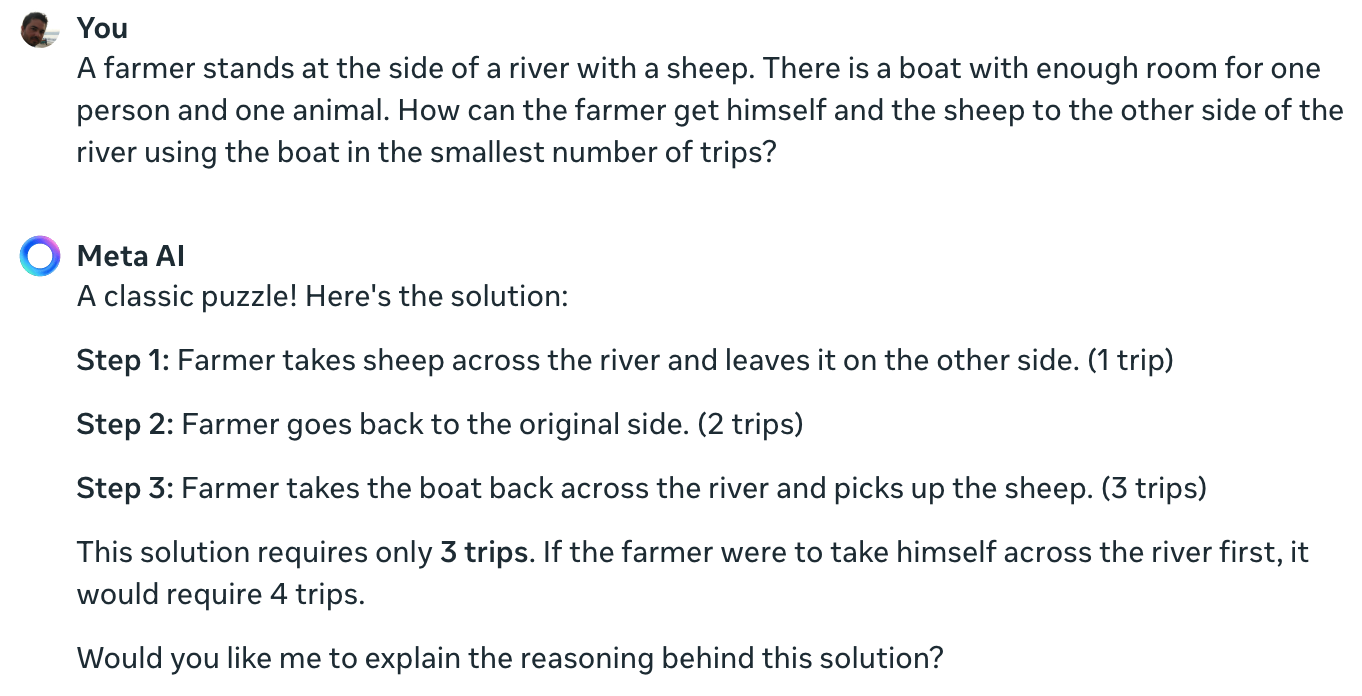

I asked Meta AI the same question and it also gets it completely wrong.

Yann LeCun explained the reason behind these results, saying, “The issue is that LLMs have no common sense, no understanding of the world, and no ability to plan (and reason).”

Is that true, or is something else at play?

What these interactions might reveal is not a lack of reasoning ability, but rather how much the output of an LLM is influenced by its training data. Meta AI’s response calling this a “classic puzzle” hints that this might be what’s happening.

The river crossing puzzle variations often reference the number of “trips” required. When you pose the puzzle without using that word, the LLM solves it.

Indeed. When there’s no prompt for “trips”, which brings memories of the previous solutions of so many similar problems, but the prompt “fastest way possible” along with COT, it answers correctly pic.twitter.com/E27vBv2y2R

— AnKo (@anko_979) June 21, 2024

These experiments were interesting, but they don’t definitively settle the argument over whether AI models are truly intelligent or simply next-token prediction machines.

However, the results do highlight how susceptible LLMs are to their training data. When GPT-4o aces the LSAT exams, is it “thinking” to find the answers to the problems or remembering them?

Until the engineers understand what goes on inside the AI black boxes they created, the arguments on X will continue unresolved.