Why it matters: Advanced AI capabilities generally require massive cloud-hosted models with billions or even trillions of parameters. But Microsoft is challenging that with the Phi-3 Mini, a pint-sized AI powerhouse that can run on your phone or laptop while delivering performance rivaling some of the biggest language models out there.

Weighing in at just 3.8 billion parameters, Phi-3 Mini is the first of three compact new AI models Microsoft has in the works. It may be small, but Microsoft claims this little overachiever can punch way above its weight class, producing responses close to what you'd get from a model 10 times its size.

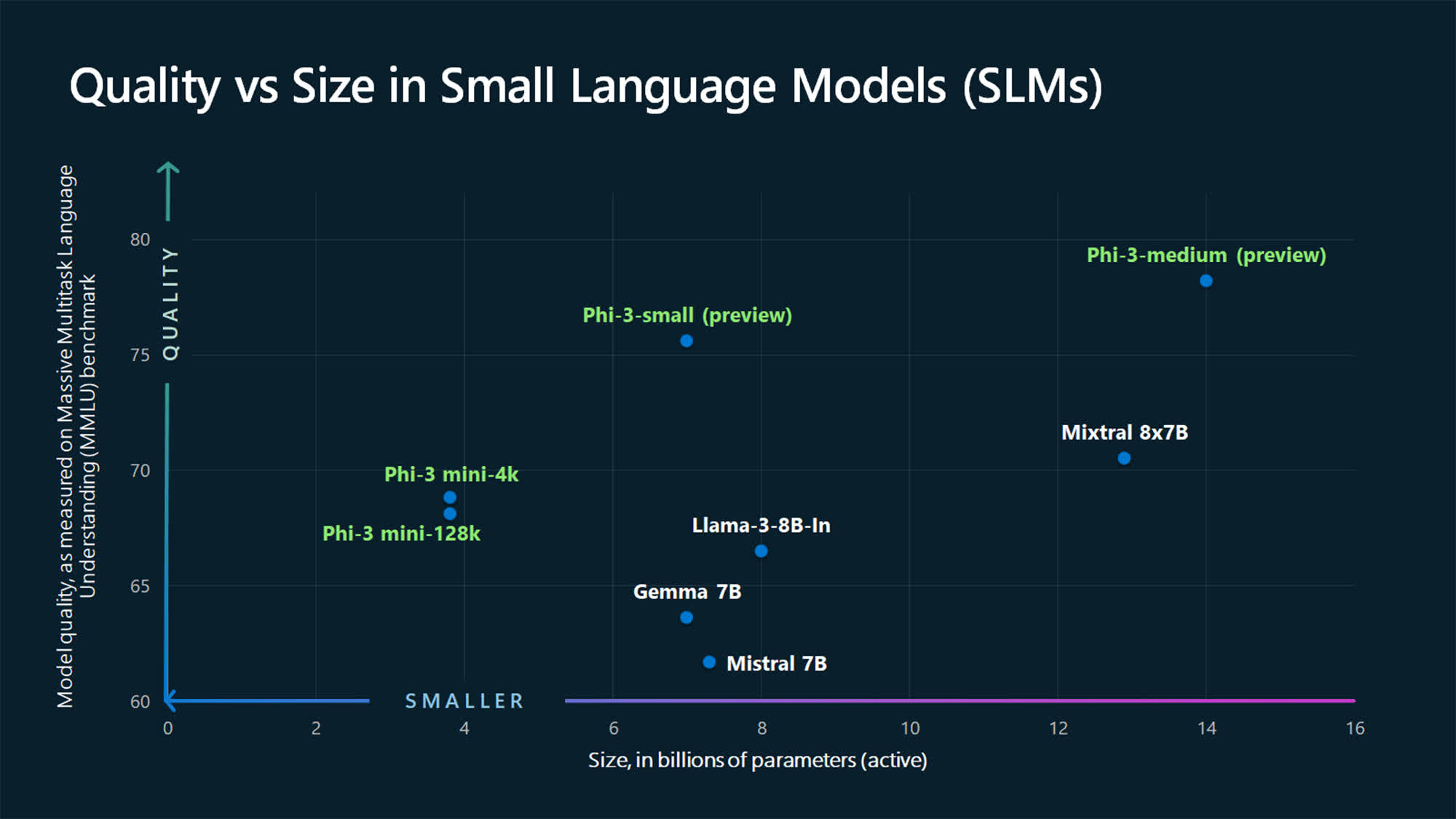

The tech giant plans to follow Mini up with Phi-3 Small (7 billion parameters) and Phi-3 Medium (14 billion) later on. But even the 3.8 billion-parameter Mini is shaping up to be a major player, according to Microsoft's numbers.

Those numbers show Phi-3 Mini holding its own against heavyweights like the 175+ billion parameter GPT-3.5 that powers the free ChatGPT, as well as French AI company Mistral's Mixtral 8x7B model. That's not bad at all for a model compact enough to run locally with no cloud connection required.

So how exactly is size measured when it comes to AI language models? It all comes down to parameters: the numerical values in a neural network that determine how it processes and generates text. More parameters generally mean a better understanding of your queries, but also increased computational demands. However, this isn't always the case, as OpenAI CEO Sam Altman explained.
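To put parameter counts in hardware terms, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone would occupy at a few common storage precisions. The byte widths and the `weight_memory_gb` helper are illustrative assumptions, not figures or tooling from Microsoft, and the estimate ignores everything a running model needs beyond its weights (activations, context cache, runtime overhead).

```python
# Rough estimate of the memory needed just to store a model's weights.
# The bytes-per-parameter values are common storage widths, assumed here
# for illustration; they are not taken from Microsoft's announcement.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted in the article

if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gb(PHI3_MINI_PARAMS, precision)
        print(f"{precision}: {size:.1f} GB")
```

Under these assumptions, 3.8 billion parameters come to about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, which gives a sense of why a model this size is a plausible fit for a laptop or phone.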

While behemoth models like OpenAI's GPT-4 and Anthropic's Claude 3 Opus are rumored to pack several hundred billion parameters, Phi-3 Mini maxes out at just 3.8 billion. Yet Microsoft's researchers managed to get amazing results through an innovative approach to refining the training data itself.

By focusing the relatively tiny 3.8 billion parameter model on an extremely curated dataset of high-quality web content and synthetically generated material developed from earlier Phi models, they gave Phi-3 Mini outsize skills for its lean stature. It can handle up to 4,000 tokens of context at a time, with a special 128k token version also available.

"Because it's reading from textbook-like material, from quality documents that explain things very, very well, you make the task of the language model to read and understand this material much easier," explains Microsoft.

The implications could be huge. If tiny AI models like Phi-3 Mini really can deliver performance competitive with today's hundred-billion-plus parameter behemoths, we may be able to leave the energy-guzzling cloud AI farms behind for everyday tasks.

Microsoft has already made the model available to put through its paces on Azure cloud, as well as via open-source AI model hosts Hugging Face and Ollama.