Bottom line: Recent advances in AI systems have significantly improved their ability to recognize and analyze complex images. However, a new paper reveals that many state-of-the-art vision language models struggle with simple visual tasks that humans find easy, like counting the number of rows and columns in a grid or how many times two lines intersect.

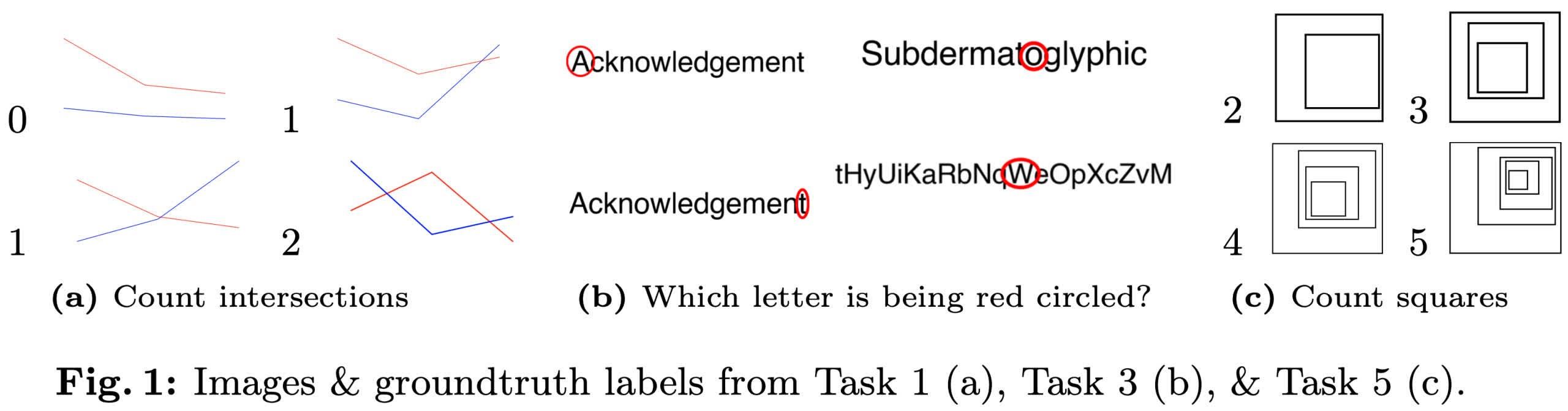

Researchers from Auburn University and the University of Alberta recently published a paper titled “Vision language models are blind.” The study used eight simple visual acuity tests to highlight deficiencies in vision language models (VLMs). The tasks included counting intersecting lines, identifying circled letters, counting nested shapes, and others. These tests have objectively definitive answers and require minimal knowledge beyond basic 2D shapes.

To prevent models from solving these tasks through memorization, the researchers generated the tests using custom code rather than pre-existing images. They evaluated four VLMs: GPT-4o, Gemini-1.5 Pro, Sonnet-3, and Sonnet-3.5. The results showed that none of the models achieved perfect accuracy, and performance varied significantly depending on the task.
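To illustrate why generating tests from code sidesteps memorization, here is a minimal sketch of one such item: two random polylines whose crossing count is computed from the geometry rather than labeled by hand. This is not the researchers' code. The function names, the three-point layout, and the integer height range are all assumptions made for illustration, and rendering the lines to an image is left out.

```python
import random


def make_polyline(rng, xs, y_max=10):
    """Return a list of (x, y) points with random integer heights."""
    return [(x, rng.randint(0, y_max)) for x in xs]


def count_crossings(a, b):
    """Count how often two polylines sharing the same x values cross.

    Within each segment the difference d = a_y - b_y is linear in x,
    so the lines cross exactly once when d changes sign.
    """
    crossings = 0
    for (p0, q0), (p1, q1) in zip(zip(a, b), zip(a[1:], b[1:])):
        d0 = p0[1] - q0[1]
        d1 = p1[1] - q1[1]
        if d0 * d1 < 0:
            crossings += 1
    return crossings


def generate_item(seed):
    """Build one hypothetical test item with a known ground-truth answer."""
    rng = random.Random(seed)
    xs = [0, 1, 2]  # assumed layout: two segments per line
    while True:
        a = make_polyline(rng, xs)
        b = make_polyline(rng, xs)
        # Reject ambiguous items where the lines merely touch at a vertex.
        if all(p[1] != q[1] for p, q in zip(a, b)):
            return {"line_a": a, "line_b": b, "answer": count_crossings(a, b)}


if __name__ == "__main__":
    print(generate_item(seed=0))
```

Because every item is drawn fresh from a seed, the exact image is unlikely to appear in any training set, and the expected answer comes from the generator itself.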

For example, the best-performing model could count the rows and columns in a blank grid with less than 60 percent accuracy. By contrast, Gemini-1.5 Pro approached human-level performance on a different task, correctly identifying circled letters 93 percent of the time.
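Per-task accuracy figures like these come down to the share of exact-match answers within each task. The sketch below shows one way to compute that breakdown. The record format, task names, and normalization rule are assumptions, and the sample records are invented purely to exercise the function; they are not results from the paper.

```python
from collections import defaultdict


def normalize(answer):
    """Compare answers case-insensitively and ignore surrounding whitespace."""
    return str(answer).strip().lower()


def accuracy_by_task(records):
    """Return {task: fraction correct} for (task, expected, predicted) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for task, expected, predicted in records:
        total[task] += 1
        if normalize(expected) == normalize(predicted):
            correct[task] += 1
    return {task: correct[task] / total[task] for task in total}


if __name__ == "__main__":
    # Invented records for illustration only; not data from the study.
    toy_records = [
        ("grid_count", "3x4", "3x4"),
        ("grid_count", "5x5", "4x5"),
        ("circled_letter", "o", "O"),
        ("circled_letter", "t", "t"),
    ]
    print(accuracy_by_task(toy_records))
```

Scoring each task separately is what makes the gap between tasks visible; a single pooled accuracy would hide it.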

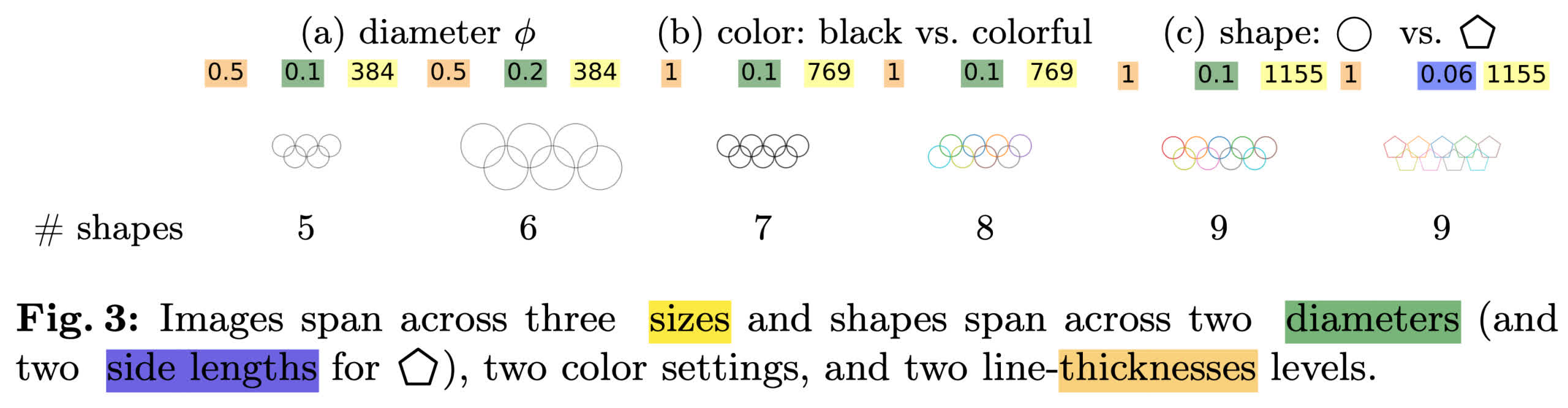

Furthermore, even minor modifications to the tasks resulted in significant performance changes. While all models could correctly identify five overlapping circles, accuracy dropped below 50 percent when the number of circles increased to six or more (above). The researchers theorize that the drop in accuracy might be due to a bias toward the five interlocking rings of the Olympic logo. Some models even provided nonsensical answers, such as “9,” “n,” or “©” for the circled letter in “Subdermatoglyphic” (below).

These findings underscore a significant limitation in the ability of VLMs to handle low-level abstract visual tasks. The behavior is reminiscent of similar capability gaps in large language models, which can generate coherent text summaries but fail basic math and spelling questions. The researchers hypothesized that these gaps might stem from the models’ inability to generalize beyond their training data. However, fine-tuning a model with specific images from one of the tasks (the two-circles-touching test) only modestly improved accuracy, from 17 to 37 percent, indicating that the model overfits the training set but fails to generalize.

The researchers propose that these capability gaps in VLMs might be due to the “late fusion” approach of attaching vision encoders to pre-trained language models. They suggest that an “early fusion” method, which combines visual and language training from the beginning, could improve performance on low-level visual tasks. However, they did not provide an analysis to support this suggestion.

You can view the results and other examples on the team’s website.