As the era of generative AI marches on, a broad range of companies have joined the fray, and the models themselves have become increasingly diverse.

Amidst this AI boom, many companies have touted their models as “open source,” but what does this really mean in practice?

The concept of open source has its roots in the software development community. Traditional open-source software makes the source code freely available for anyone to view, modify, and distribute.

In essence, open source is a collaborative, knowledge-sharing engine for software innovation, one that has produced the Linux operating system, the Firefox web browser, and the Python programming language.

However, applying the open-source ethos to today’s massive AI models is far from straightforward.

These systems are often trained on vast datasets containing terabytes or petabytes of data, using complex neural network architectures with billions of parameters.

The computing resources required cost millions of dollars, the talent is scarce, and intellectual property is often well-guarded.

We can observe this in OpenAI, which, as its name suggests, used to be an AI research lab largely dedicated to the open-source ethos.

However, that ethos quickly eroded once the company smelled the money and needed to attract investment to fuel its goals.

Why? Because open-source products are not geared towards profit, and AI is expensive and valuable.

Still, as generative AI has exploded, organizations like Mistral, Meta, xAI, and the BigScience collaboration behind BLOOM have released open-source models to further research while preventing companies like Microsoft and Google from hoarding too much influence.

But how many of these models are truly open source in nature, and not just in name?

Clarifying how open open-source models really are

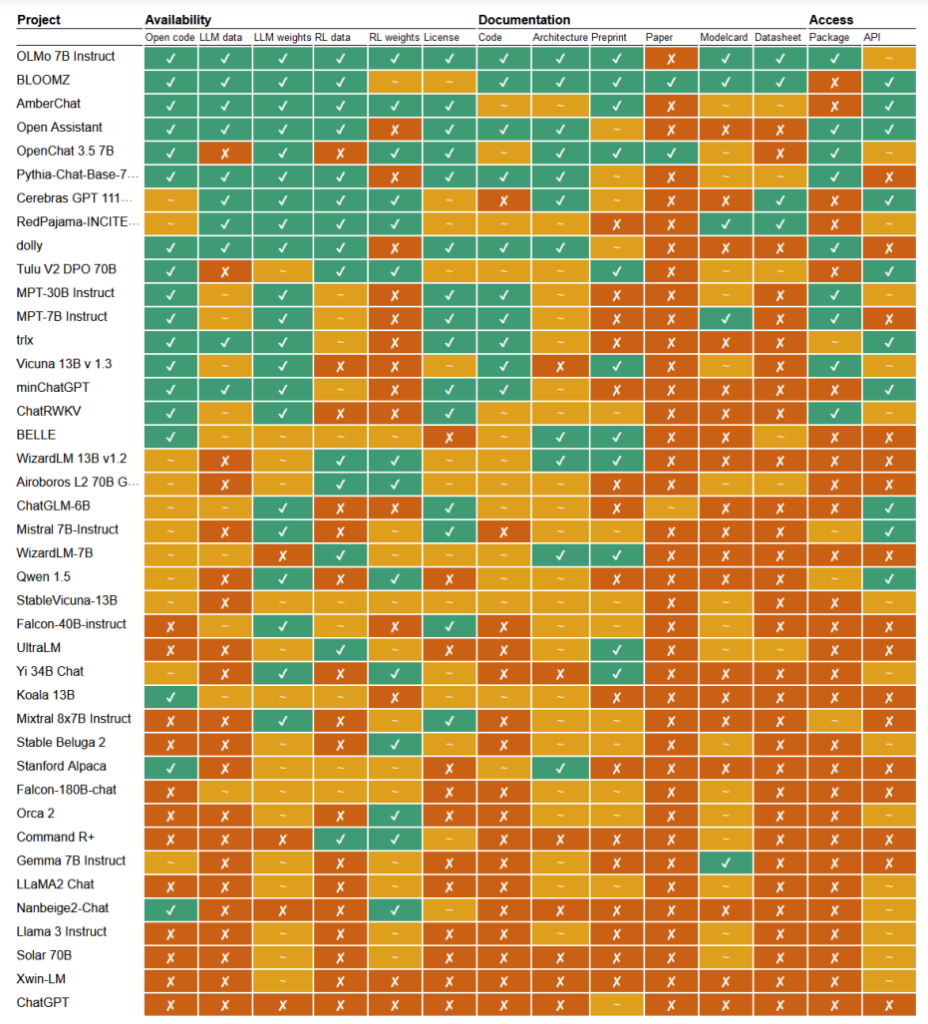

In a recent study, researchers Mark Dingemanse and Andreas Liesenfeld from Radboud University, Netherlands, analyzed numerous prominent AI models to explore how open they are. They assessed multiple criteria, such as the availability of source code, training data, model weights, research papers, and APIs.

For example, Meta’s LLaMA model and Google’s Gemma were found to be merely “open weight,” meaning the trained model is publicly released for use without full transparency into its code, training process, data, and fine-tuning methods.

At the other end of the spectrum, the researchers highlighted BLOOM, a large multilingual model developed by a collaboration of over 1,000 researchers worldwide, as an exemplar of truly open-source AI. Every element of the model is freely available for inspection and further research.

The paper assessed more than 30 models (both text and image), and the following examples show the immense variation among those that claim to be open source:

- BloomZ (BigScience): Fully open across all criteria, including code, training data, model weights, research papers, and API. Highlighted as an exemplar of truly open-source AI.

- OLMo (Allen Institute for AI): Open code, training data, weights, and research papers. API only partially open.

- Mistral 7B-Instruct (Mistral AI): Open model weights and API. Code and research papers only partially open. Training data unavailable.

- Orca 2 (Microsoft): Partially open model weights and research papers. Code, training data, and API closed.

- Gemma 7B instruct (Google): Partially open code and weights. Training data, research papers, and API closed. Described by Google as “open” rather than “open source”.

- Llama 3 Instruct (Meta): Partially open weights. Code, training data, research papers, and API closed. An example of an “open weight” model without fuller transparency.
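One way to see how far apart these models sit is to turn the list into data and tally it. The sketch below transcribes the ratings above for the five criteria this article names. The numeric weights (open = 1, partial = 0.5, closed = 0) are an assumption chosen for illustration, not the researchers’ scoring method, so the totals only indicate relative openness under that assumption.

```python
from enum import Enum


class Openness(Enum):
    # Illustrative numeric weights: an assumption, not the study's own scoring.
    OPEN = 1.0
    PARTIAL = 0.5
    CLOSED = 0.0


# The five criteria named in the article.
CRITERIA = ("code", "training_data", "weights", "papers", "api")

O, P, C = Openness.OPEN, Openness.PARTIAL, Openness.CLOSED

# Ratings transcribed from the list of models above.
MODELS = {
    "BloomZ": dict(code=O, training_data=O, weights=O, papers=O, api=O),
    "OLMo": dict(code=O, training_data=O, weights=O, papers=O, api=P),
    "Mistral 7B-Instruct": dict(code=P, training_data=C, weights=O, papers=P, api=O),
    "Orca 2": dict(code=C, training_data=C, weights=P, papers=P, api=C),
    "Gemma 7B Instruct": dict(code=P, training_data=C, weights=P, papers=C, api=C),
    "Llama 3 Instruct": dict(code=C, training_data=C, weights=P, papers=C, api=C),
}


def openness_score(ratings: dict) -> float:
    """Sum the weights across all criteria; a missing criterion raises KeyError."""
    return sum(ratings[criterion].value for criterion in CRITERIA)


def rank(models: dict) -> list[tuple[str, float]]:
    """Return (name, score) pairs, most open first; ties keep insertion order."""
    scored = [(name, openness_score(ratings)) for name, ratings in models.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(MODELS):
        print(f"{name:<22}{score:.1f}")
```

Under these weights BloomZ scores the maximum of 5.0 and Llama 3 Instruct scores 0.5. Keeping the per-criterion ratings, rather than only a single total, preserves the distinction the researchers draw: a model can be “open weight” while remaining closed on everything else.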

A lack of transparency

The lack of transparency surrounding AI models, especially those developed by large tech companies, raises serious concerns about accountability and oversight.

Without full access to a model’s code, training data, and other key components, it becomes extremely challenging to understand how the model works and makes decisions. This makes it difficult to identify and address potential biases, errors, or misuse of copyrighted material.

Copyright is a case in point: since training data is kept under lock and key, identifying specific works within it is almost impossible.

The New York Times’s recent lawsuit against OpenAI demonstrates the real-world consequences of this problem. OpenAI accused the NYT of using prompt-engineering attacks to expose training data and coax ChatGPT into reproducing its articles verbatim, in an effort to prove that OpenAI’s training data contains copyrighted material.

“The Times paid someone to hack OpenAI’s products,” said OpenAI.

In response, Ian Crosby, the lead legal counsel for the NYT, said, “What OpenAI bizarrely mischaracterizes as ‘hacking’ is simply using OpenAI’s products to look for evidence that they stole and reproduced The Times’ copyrighted works. And that’s exactly what we found.”

Indeed, this is just one example from a huge stack of lawsuits that are currently stalled, partly due to the opaque, impenetrable nature of AI models.

And lawsuits are only the tip of the iceberg. Without robust transparency and accountability measures, we risk a future where unexplainable AI systems make decisions that profoundly impact our lives, economy, and society yet remain shielded from scrutiny.

Calls for openness

There have been calls for companies like Google and OpenAI to grant access to their models’ inner workings for the purposes of safety research.

However, the truth is that even AI companies don’t truly understand how their models work.

This is known as the “black box” problem, which arises when trying to interpret and explain a model’s specific decisions in a human-understandable way.

For example, a developer might know that a deep learning model is accurate and performs well, but they may struggle to pinpoint exactly which features the model uses to make its decisions.

Anthropic, which developed the Claude models, recently conducted an experiment to identify how Claude 3 Sonnet works, explaining, “We mostly treat AI models as a black box: something goes in and a response comes out, and it’s not clear why the model gave that particular response instead of another. This makes it hard to trust that these models are safe: if we don’t know how they work, how do we know they won’t give harmful, biased, untruthful, or otherwise dangerous responses? How can we trust that they’ll be safe and reliable?”

The experiment illustrated that AI developers don’t fully understand the black boxes they have built, and that objectively explaining model outputs is an exceptionally difficult task.

In fact, Anthropic estimated that it would consume more computing power to ‘open the black box’ than to train the model itself!

Developers are actively attempting to combat the black-box problem through research like “Explainable AI” (XAI), which aims to develop techniques and tools that make AI models more transparent and interpretable.

XAI methods seek to provide insights into a model’s decision-making process, highlight the most influential features, and generate human-readable explanations. XAI has already been applied to models deployed in high-stakes applications such as drug development, where understanding how a model works could be pivotal for safety.
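To make “highlight the most influential features” concrete, here is a minimal sketch of permutation feature importance, one common model-agnostic XAI technique, chosen here for illustration because the article does not name a specific method. The model `black_box_model` and the toy data are hypothetical. The idea: shuffle one input feature at a time and measure how much the model’s error grows. Interpretability research on large language models, such as the Anthropic work described above, relies on very different and far more expensive methods.

```python
import random
from statistics import fmean


def black_box_model(row):
    # Hypothetical opaque model: the procedure below only calls it, never inspects it.
    # By construction, feature 0 matters a lot, feature 1 a little, feature 2 not at all.
    return 3.0 * row[0] + 0.5 * row[1] + 0.0 * row[2]


def mean_squared_error(model, X, y):
    return fmean((model(row) - target) ** 2 for row, target in zip(X, y))


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(fmean(increases))
    return importances


# Toy data: targets come straight from the model, so the baseline error is zero.
data_rng = random.Random(42)
X = [[data_rng.random() for _ in range(3)] for _ in range(500)]
y = [black_box_model(row) for row in X]

importances = permutation_importance(black_box_model, X, y)
for j, score in enumerate(importances):
    print(f"feature {j}: {score:.3f}")
```

Shuffling feature 0 raises the error far more than shuffling feature 1, and feature 2 scores exactly zero, recovering the ranking built into the toy model. The technique needs only the ability to query the model and some labelled data. That is one reason it suits black boxes, though it explains which inputs matter, not why.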

Open-source initiatives are vital to XAI and other research that seeks to penetrate the black box and provide transparency into AI models.

Without access to a model’s code, training data, and other key components, researchers cannot develop and test techniques that explain how AI systems really work, or identify the specific data they were trained on.

Regulations could confuse the open-source situation further

The European Union’s recently passed AI Act is set to introduce new regulations for AI systems, with provisions that specifically address open-source models.

Under the Act, open-source general-purpose models below a certain scale (those not classed as posing “systemic risk”) will be exempt from some of its more extensive transparency requirements.

However, as Dingemanse and Liesenfeld point out in their study, the exact definition of “open source AI” under the AI Act is still unclear and could become a point of contention.

The Act currently defines open-source models as those released under a “free and open” license that allows users to modify the model. However, it does not specify requirements around access to training data or other key components.

This ambiguity leaves room for interpretation and potential lobbying by corporate interests. The researchers warn that refining the open source definition in the AI Act “will probably form a single pressure point that will be targeted by corporate lobbies and big companies.”

There’s a risk that without clear, robust criteria for what constitutes truly open-source AI, the regulations could inadvertently create loopholes or incentives for companies to engage in “open-washing”: claiming openness for the legal and public relations benefits while still keeping important aspects of their models proprietary.

Moreover, the global nature of AI development means that differing regulations across jurisdictions could further complicate the landscape.

If major AI producers like the United States and China adopt divergent approaches to openness and transparency requirements, this could lead to a fragmented ecosystem in which the degree of openness varies widely depending on where a model originates.

The research authors emphasize the necessity for regulators to interact carefully with the scientific neighborhood and different stakeholders to make sure that any open-source provisions in AI laws are grounded in a deep understanding of the know-how and the ideas of openness.

As Dingemanse and Liesenfeld conclude in a dialogue with Nature, “It’s truthful to say the time period open supply will tackle unprecedented authorized weight within the international locations ruled by the EU AI Act.”

How this performs out in follow could have momentous implications for the longer term route of AI analysis and deployment.